In Part 3 we learned a number of ways to scale storage performance for a single VM including but not limited too:

- Using multiple PVSCSI controllers

- Using multiple virtual disks

- Spreading large workloads (like databases) across multiple vDisks/Controllers

- Increasing the CVMs vCPUs and/or vRAM

- Adding storage only nodes

- Using Acropolis Block Services (ABS)

Now here at Nutanix, especially in the Solutions/Performance engineering team we’re never satisfied and we’re always pushing for more efficiency which leads to greater performance.

A colleague of mine, Michael Webster (NPX#007 and VCDX#66) was a key part of the team who designed and developed what is now known as “Volume Group Load Balancer” or VG LB for short.

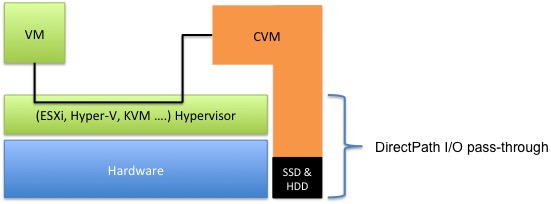

Volume Group Load Balancer is an Acropolis Hypervisor (AHV) only capability which combines the IO path efficiencies of AHV Turbo Mode with the benefits of the Acropolis Distributed Storage Fabric (ADSF) to create a more simplified and dynamic version of Acropolis Block Services (ABS).

One major advantage of VG LB over ABS is it’s simplicity.

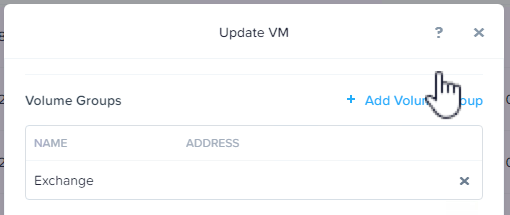

There is no requirement for in-guest iSCSI which removes the potential for driver and configuration issues and VG LB is configured through PRISM UI by using the update VM option making it a breeze to setup.

The only complexity with VG LB currently is to enable the load balancing functionality, it needs to be applied at the Acropolis CLI (acli) using the following command:

acli vg.update Insert_vg_name_here load_balance_vm_attachments=true

In the event you do not wish all Controller VMs to provide IO for VG LB, one or more CVMs can be excluded from load balancing. However I recommend leaving the cluster to sort itself out as the Acropolis Dynamic Scheduler (ADS) will move virtual disk sessions if CVM contention is discovered.

iSCSI sessions are also dynamically balanced as workload on individual CVMs exceed 85% to ensure hot spots are quickly alleviated which is another reason why CVMs should not be excluded as you are likely constraining performance for the VG LB VM unnecessarily.

VG LB is how Nutanix has achieved >1 MILLION 8k random read IOPS at just 0.11ms latency from a single VM as shown below.

#Nutanix delivering 1 million IOPS in a single VM at 8k block size and only 0.11ms latency puts to bed the In-kernel vs Controller VM debate.#VSAN #VMware #HCI pic.twitter.com/afkq1cC0e3

— Josh Odgers (@josh_odgers) November 9, 2017

This was achieved using just a 10 node cluster, imagine what can be achieved when you scale out the cluster further.

A Frequently asked question relating to high performance VMs is what happens when you vMotion?

The link above shows this in detail including a YouTube demonstration, but in short the IO dropped below 1 million IOPS for approx 3 seconds during the vMotion with the lowest value recorded at 956k IOPS. I’d say an approx 10% drop for 3 seconds is pretty reasonable as the performance drop is caused by the migration stunning the VM and not by the underlying storage.

The next question is “What about mixed read/write workloads?”

Again the link above shows this in detail including a YouTube demonstration, but at this stage you’re probably not surprised that this result shows a maximum starting baseline of 436K random read and 187k random write IOPS and immediately following the migration performance reduced to 359k read and 164k write IOPS before achieving greater performance than the original baseline @ 446k read and 192k IOPS within a few seconds.

So not only can Nutanix VG LB achieve fantastic performance, it can do so during normal day to day operations such as VM live migrations.

The VG LB capability is unique to Nutanix and is only achievable thanks to the true Distributed Storage Fabric.

With Nutanix highly scalable software defined storage and the unique capabilities like storage only nodes, AHV Turbo and VG LB, the question “Why?” seriously needs to be asked of anyone recommending a SAN.

I’d appreciate any constructive questions/comments on use cases which you believe Nutanix cannot handle and I’ll follow up with a blog post explaining how it can be done, or I’ll confirm if it’s not currently supported/recommended.

Summary:

Part 3 has taught us that Nutanix provides excellent scalability for Virtual Machines and provides ABS for niche workloads which may require more performance than a single node can offer while Part 4 explains how Nutanix’ next generation hypervisor (AHV) provides further enhanced and simplified performance for monster VMs with Volume Group Load Balancing leveraging Turbo Mode.

Back to the Scalability, Resiliency and Performance Index.