Part 1 will focus on CPU sizing for Exchange Mailbox (MBX) or Multi Server Role (MSR) deployments.

The Exchange 2013 Server Role Requirements Calculator v6.6 should be used to size the VM to ensure sufficient performance for the specific Exchange deployment.

One key input for the calculator is the “SpecInt2006 Rate Value” which can be found on the “Input” tab of the calculator (shown below).

To find the SpecInt2006 Rate Value for your specific CPU, I recommend using the Exchange Processor Query Tool which allows you to enter the Processor Model number of your servers and query the Spec.org database for the rating of your CPU.

Note: This tool is applicable to Exchange 2010 and 2013 deployments despite the tool being titled “Exchange 2010 Processor Query Tool”.

To do this, enter the model number of your CPU (example E5-2697 v2 shown below) and press query.

The tool will then return the list of tested servers on the right-hand side of the spreadsheet; an example is shown below.

The SpecInt2006 result for your CPU is highlighted in Orange in the “Result” column.

At this stage, the drop-down box in Step 4 allows you to choose the number of physical cores you plan to use, and the tool will then return the average result of all tested servers.

The above result for example assumes a Dual Socket physical server with 12 core Intel E5-2697 v2 processors.

As we are discussing Virtualizing Exchange, Step 7 is applicable.

Here the tool allows you to enter the overcommitment (vCPU to Physical Core) and the number of vCPUs (called virtual processors in the spreadsheet), which then results in what the spreadsheet calls the “Virtual mailbox server SPECint2006 Rate Value”, shown below in Orange.

The calculator assumes that CPU overcommitment of 2:1 degrades performance by 50%. This is not strictly true, but it can be used as general guidance that high levels of CPU overcommitment, which may lead to CPU contention, are not recommended for MS Exchange deployments. It is important to note that CPU overcommitment ≠ CPU contention, although the higher the overcommitment, the higher the possibility of contention (CPU Ready).
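As a rough illustration of that linear assumption, the sketch below scales a physical SPECint2006 rate by the number of vCPUs and divides by the overcommitment ratio. The function name and the exact formula are assumptions made for illustration; the spreadsheet may compute its value differently.

```python
def virtual_specint_rate(physical_rate, physical_cores, vcpus, overcommit_ratio=1.0):
    """Estimate the SPECint2006 rate available to a VM (illustrative only).

    Assumes performance scales linearly with cores and degrades linearly
    with overcommitment, e.g. 2:1 halves the result.
    """
    if physical_cores <= 0 or vcpus <= 0:
        raise ValueError("physical_cores and vcpus must be positive")
    # Ratios below 1:1 are treated as 1:1; undercommitment adds no performance.
    ratio = max(overcommit_ratio, 1.0)
    return physical_rate * vcpus / physical_cores / ratio


# 6 vCPUs on a 12-core server rated 479, with no overcommitment and at 2:1.
no_overcommit = virtual_specint_rate(479, 12, 6, 1.0)
two_to_one = virtual_specint_rate(479, 12, 6, 2.0)
```

The 2:1 result is exactly half of the 1:1 result, which mirrors the conservative assumption described above rather than measured behaviour.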

Now that we have the SPECint2006 Rate Value, this can be entered into the Primary and Secondary (if applicable) field of the Exchange 2013 Server Role Requirements Calculator (shown below).

The SPECInt2006 value is for the physical processor, which if it supports Hyper-threading (HT), means the rating includes the performance benefit of HT. The key point here is using just physical cores for sizing means your VM will not get the full performance of the SPECint2006 rating, it will be slightly less. This will be discussed in more detail in Part 2 – vCPU configurations.

The “Processor Cores / Server” field should be populated by the physical cores intended to be used.

While the “Processor Cores / Server” value does not impact the CPU utilization calculations, entering it allows the calculator to report the number of Processor Cores Utilized, as shown below from the “Role Requirements” tab.

The number of cores utilized helps calculate the number of vCPUs required for the Exchange VM. If the “Server CPU utilization” is much lower than 80% (recommended maximum), the “SPECInt2006 rate value” and “Processor Cores / Server” can be reduced.

Example: If the calculator reports Server CPU Utilization at 40% and the CPU type is Intel E5-2697 v2 with 12 physical cores and a SPECint2006 rating of 479, the Virtual Machine should be sized with 6 vCPUs. To confirm this, the SPECint2006 rating in the Primary and Secondary (if applicable) fields of the Exchange 2013 Server Role Requirements Calculator can be reduced by 50% (from 479 to 239.5), which will result in the calculator reporting Server CPU Utilization at 80%.
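The arithmetic in this example can be sketched as follows. The helper names are hypothetical, and the sketch assumes utilization scales inversely with the SPECint2006 rating and with the core count, which is a simplification of what the calculator does.

```python
import math


def required_vcpus(reported_util_pct, physical_cores, target_util_pct=80):
    """Cores needed to bring the reported utilization up to the target."""
    cores_needed = physical_cores * reported_util_pct / target_util_pct
    return math.ceil(cores_needed)


def rescaled_rate(rate, reported_util_pct, target_util_pct=80):
    """SPECint2006 rate to enter so the calculator reports the target utilization."""
    return rate * reported_util_pct / target_util_pct


# Values from the example: 40% utilization, 12 cores, rating of 479.
vcpus = required_vcpus(40, 12)   # 6
rate = rescaled_rate(479, 40)    # 239.5
```

Rounding up with `math.ceil` is a design choice in this sketch, so that a fractional core requirement never results in an undersized VM.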

Another option is to review the number of Mailbox Servers configured. Where utilization is low, as in the previous example (40%), you could choose to “scale up” each of the Exchange VMs. To do this, change the highlighted field on the “Input” tab of the calculator to 4, and you will see the utilization under “Server configuration” (on the “Role Requirements” tab) increase to 80%.

Scaling up reduces the number of Windows/Exchange instances, the licenses, and the ongoing maintenance (such as patching) required, but it also increases the failure domain and the impact of a failure, so this needs to be a business decision as well as an architectural/technical one.

As a general rule, I recommend customers scale out until they have 4 or more Exchange VMs (across 4 or more ESXi hosts), then scale up (and out) as required. This ensures the impact of a server failure is 25%, compared to 50% if it was a scaled up Exchange server deployment with only 2 VMs.
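The failure-impact percentages quoted above follow from a simple ratio. This is a minimal sketch with a hypothetical function name, and it assumes mailboxes are spread evenly across the Exchange VMs with one VM per ESXi host.

```python
def failure_impact_percent(vm_count, failed_vms=1):
    """Share of the Exchange deployment affected when some VMs fail."""
    if vm_count <= 0:
        raise ValueError("vm_count must be positive")
    if not 0 <= failed_vms <= vm_count:
        raise ValueError("failed_vms must be between 0 and vm_count")
    return 100.0 * failed_vms / vm_count


four_vm_impact = failure_impact_percent(4)  # 25.0
two_vm_impact = failure_impact_percent(2)   # 50.0
```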

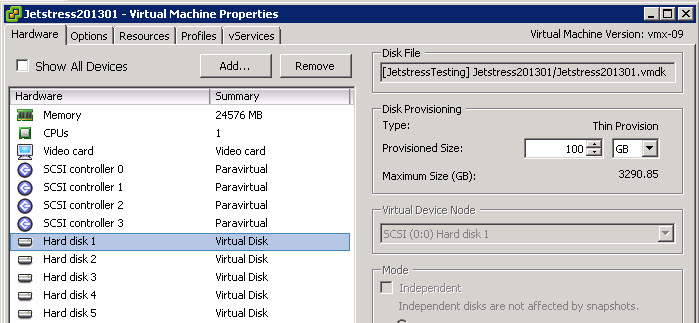

An important consideration for any business critical application deployment is the scalability of the solution. In this case, when discussing virtualizing one or more Exchange servers, the Virtual Machine maximums are critical.

The below shows the maximum vCPUs supported for a VMware based virtual machine.

vSphere Virtual Machine CPU Maximums

Maximum vCPUs: 64 (vSphere 5.1 or later)

Maximum vCPUs: 32 (vSphere 5.0)

Maximum vCPUs: 8 (vSphere 4.1)

The above numbers are dependent on the physical hardware chosen.
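To show how the maximums and the hardware interact, here is a small sketch that caps a VM's vCPU count at the lower of the listed maximum and the host's physical core count. The dictionary holds only the three values listed above, the function name is hypothetical, and capping at physical cores is an assumption that follows this article's approach of sizing with physical cores.

```python
# vCPU maximums per VM as listed above; check the configuration maximums
# documentation for any release not shown here.
VCPU_MAXIMUMS = {"4.1": 8, "5.0": 32, "5.1": 64}


def max_usable_vcpus(vsphere_version, host_physical_cores):
    """Largest vCPU count to consider for one VM on a given host."""
    if vsphere_version not in VCPU_MAXIMUMS:
        raise ValueError(f"no maximum recorded for vSphere {vsphere_version}")
    if host_physical_cores <= 0:
        raise ValueError("host_physical_cores must be positive")
    return min(VCPU_MAXIMUMS[vsphere_version], host_physical_cores)


# A dual-socket host with 12-core processors has 24 physical cores.
limit_51 = max_usable_vcpus("5.1", 24)  # limited by the hardware
limit_41 = max_usable_vcpus("4.1", 24)  # limited by the vSphere maximum
```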

Recommendations for CPU sizing:

1. CPU overcommitment should be less than 2:1, and ideally 1:1, for hosts servicing Exchange workloads. This will be discussed further in this series.

Use the vSphere Cluster Sizing Calculator to confirm overcommitment ratios for your cluster or to validate your design.

2. Size Exchange Server VMs to less than 80% CPU Utilization

This allows for burst activity such as increased load or DAG failovers.

3. Scale up a single Exchange VM per ESXi host as opposed to running multiple smaller Exchange VMs per host.

4. Do not oversize Exchange VMs on Day 1. Size for Day 1 demand and scale vCPUs as required (which can be done quickly and easily thanks to the virtual layer).

5. CPU reservations do not solve CPU scheduling contention (a.k.a CPU Ready). CPU reservations should not be required in properly sized environments.

6. Size Exchange VMs using Physical Cores and assume no benefit from HT

7. Leave HT turned on at the ESXi layer
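Recommendations 1 and 2 above lend themselves to a quick sanity check. The sketch below is illustrative only: the function name and warning wording are hypothetical, and the thresholds are simply the 2:1, 1:1 and 80% figures from the list.

```python
def check_exchange_host(total_vcpus, physical_cores, exchange_cpu_util_pct):
    """Return warnings for a host against the sizing recommendations above."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    warnings = []
    # Overcommitment is vCPUs allocated on the host per physical core (HT ignored).
    ratio = total_vcpus / physical_cores
    if ratio >= 2.0:
        warnings.append(f"overcommitment {ratio:.2f}:1 is 2:1 or higher")
    elif ratio > 1.0:
        warnings.append(f"overcommitment {ratio:.2f}:1 is above the ideal 1:1")
    if exchange_cpu_util_pct >= 80:
        warnings.append(f"Exchange CPU utilization {exchange_cpu_util_pct}% is not below 80%")
    return warnings


# 24 vCPUs on 24 physical cores at 60% utilization raises no warnings.
ok = check_exchange_host(24, 24, 60)
# 48 vCPUs on 24 cores at 85% utilization raises two warnings.
problems = check_exchange_host(48, 24, 85)
```

A low overcommitment ratio does not guarantee the absence of contention, so a check like this complements, rather than replaces, monitoring CPU Ready.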
