At a recent meeting, a potential customer comparing NAS and Hyper-converged solutions for an upcoming project told me they would only consider platforms with VAAI-NAS support.

As the customer was considering a wide range of workloads, including VDI and server workloads, the requirement for VAAI-NAS makes sense.

The customer then told us they were comparing four different Hyper-converged platforms and a range of traditional NAS solutions. They had eliminated two platforms because they had no VAAI support at all (!), but said Nutanix and one other vendor both had VAAI-NAS support, so this was not a differentiator.

Having personally completed the VAAI-NAS certification for Nutanix, I was curious which other vendor had full VAAI-NAS support, as it was (and remains) my understanding that Nutanix is the only Hyper-converged vendor to have passed the full suite of certification tests.

The customer told us who the other vendor was, so we checked the HCL together. Sure enough, that vendor supported only a subset of VAAI-NAS capabilities, even though its sales reps and marketing material all claim full VAAI-NAS support.

The customer was more than a little surprised that VAAI-NAS certification does not require all capabilities to be supported.

Any storage vendor that wants its customers to receive VMware support for VAAI-NAS must complete a certification process, which includes a comprehensive set of tests. For vSphere 5.5, VAAI-NAS certification comprises a total of 66 tests, all of which must be passed to gain full VAAI-NAS certification.

However, as this customer learned, it is possible, and indeed common, for storage vendors not to pass all tests and to gain certification for only a subset of VAAI-NAS capabilities.

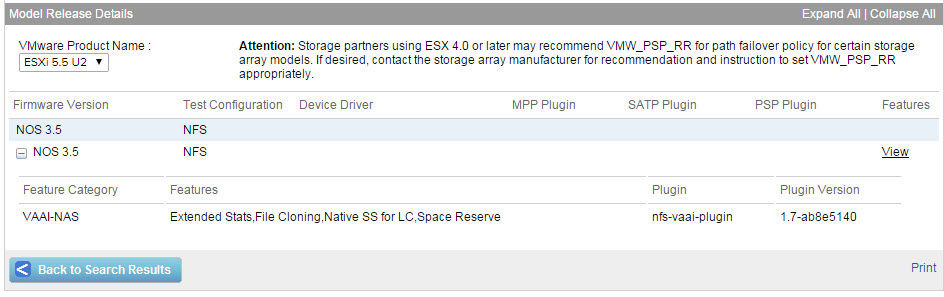

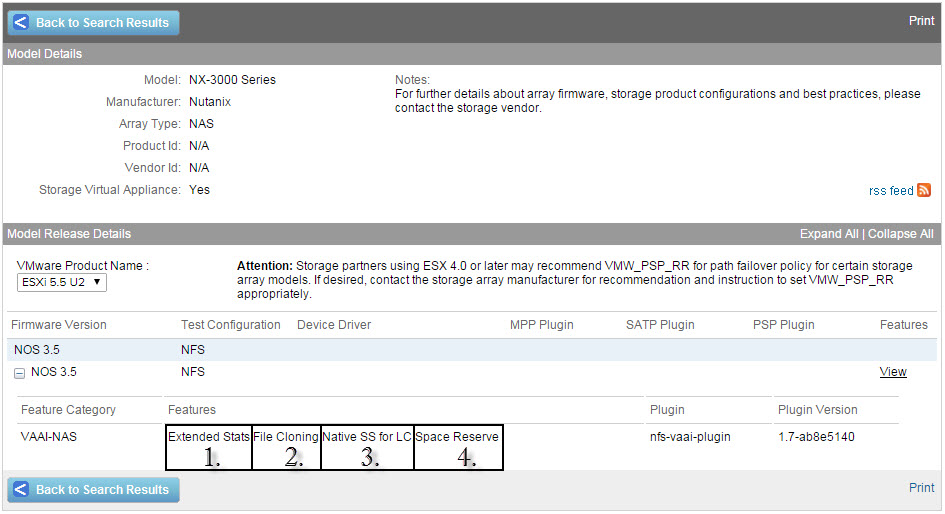

The image below shows the Nutanix listing on the VMware HCL for VAAI-NAS, highlighting the four VAAI-NAS features that can be certified and supported:

1. Extended Stats

2. File Cloning

3. Native SS for LC (native snapshot support for linked clones)

4. Space Reserve

This is an example of a fully certified solution supporting all VAAI-NAS features.

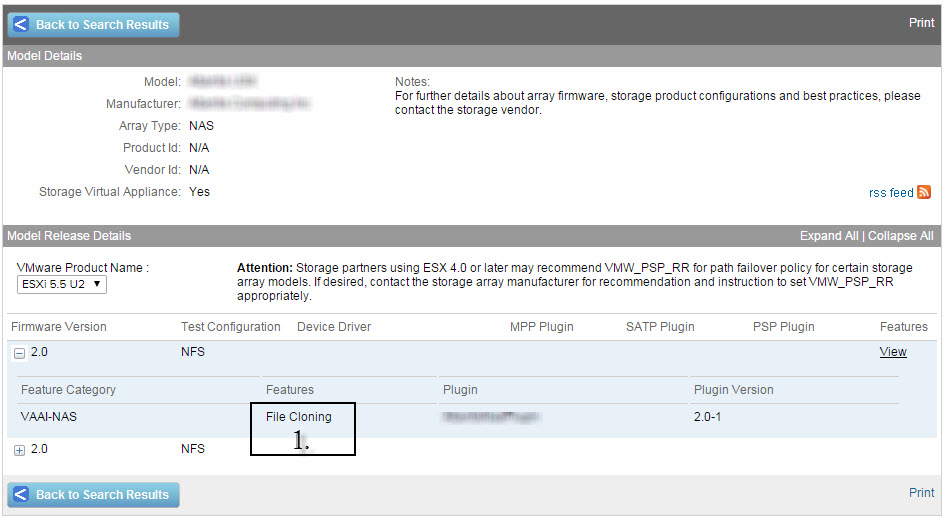

Here is an example of a VAAI-NAS certified solution that has certified only one of the four capabilities. (This is a Hyper-converged platform, although it was not being considered by the customer.)

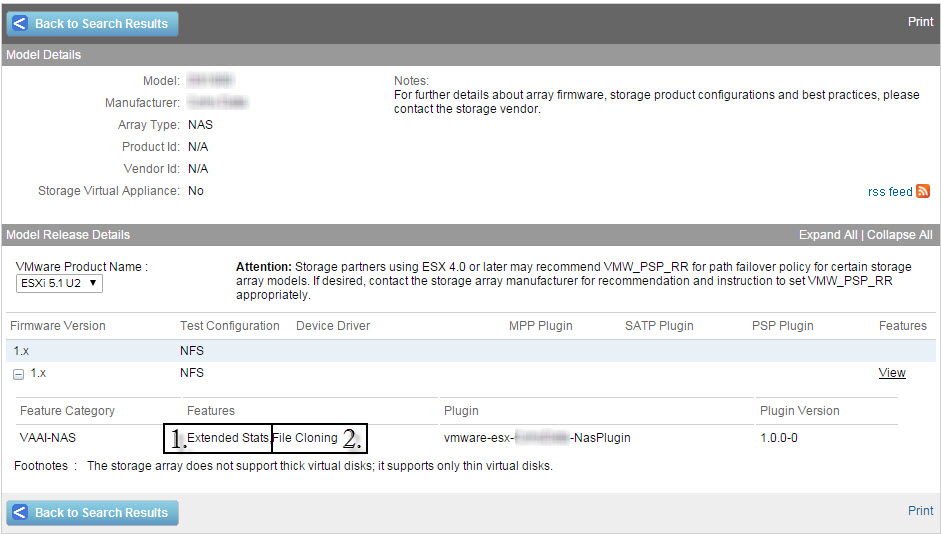

Here is another example of a VAAI-NAS certified solution that has certified only two of the four capabilities. (This is also a Hyper-converged platform.)

So customers using the above storage solution cannot, for example, create thick-provisioned virtual disks (which on NFS requires the Space Reserve capability), which prevents the use of Fault Tolerance (FT) and the virtualization of business-critical applications such as Oracle RAC.

In the next example, the vendor has certified three of the four capabilities and is not certified for Native SS for LC. (This is a traditional centralized NAS platform.)

So this solution does not support using storage-level snapshots to create linked clones, which means deployments such as Horizon View (VDI) or vCloud Director Fast Provisioning will not get the cloning performance or capacity savings of a fully certified and supported VAAI-NAS storage solution.

The point of this article is simply to raise awareness that not all solutions advertising VAAI-NAS support are created equal, so ALWAYS CHECK THE HCL! Don’t just take the friendly sales rep’s word for it, as they may be misleading you, or flat out lying, about VAAI-NAS capabilities and support.

When comparing traditional NAS or Hyper-converged solutions, check the VMware HCL and compare the VAAI-NAS capabilities each solution supports, as some vendors have certified only a subset of them.

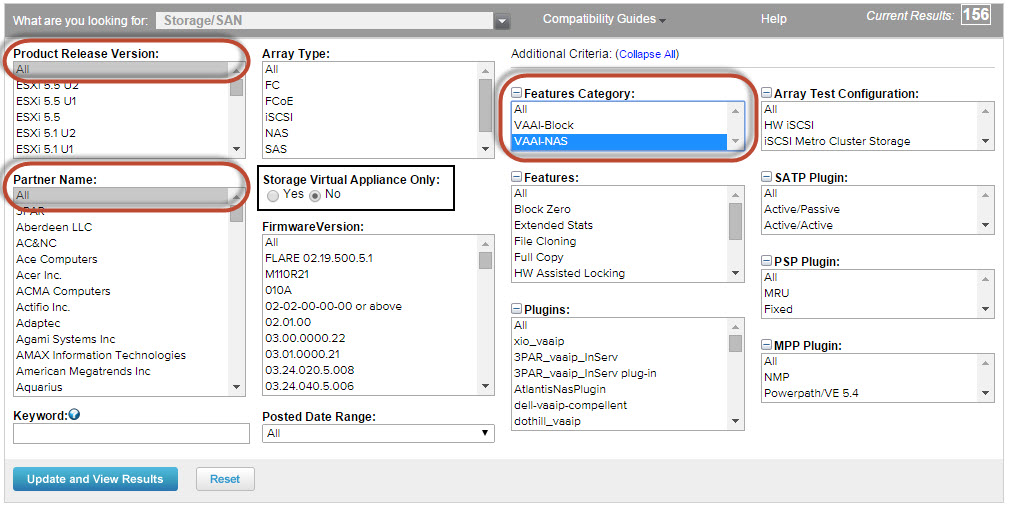

To compare solutions properly, go to the Storage/SAN section of the VMware HCL and, as shown in the image below, select:

1. Product Release Version: All

2. Partner Name: All, or the specific vendor you wish to compare

3. Features Category: VAAI-NAS

4. Storage Virtual Appliance Only: No for SAN/NAS, Yes for Hyper-converged or VSA solutions

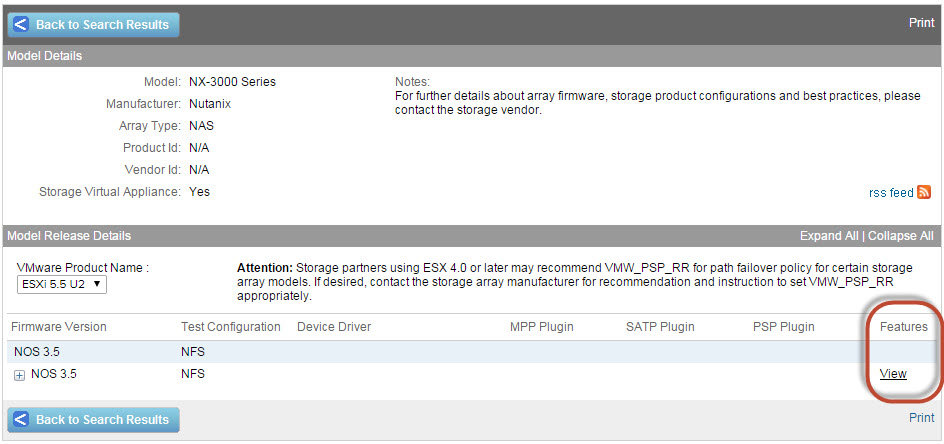

Then click the model you wish to compare, e.g. NX-3000 Series.

Then you should see something similar to the below:

Click the “View” link to show the VAAI-NAS capabilities; the resulting page highlights which VAAI-NAS features are supported.

Note: if the “View” link does not appear, the product is NOT supported for VAAI-NAS.

If the Features list does not include all of Extended Stats, File Cloning, Native SS for LC and Space Reserve, the solution does not support the full set of VAAI-NAS capabilities.
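When comparing several listings, this check can be written down as a small script. The sketch below is illustrative only: it assumes you have copied the feature names from each HCL “View” page by hand (it does not query the HCL), and the helper names `vaai_nas_gaps` and `is_fully_certified` are hypothetical. It reports which of the four capabilities are missing along with a short note on what each one provides; the notes for Space Reserve and Native SS for LC follow the examples discussed earlier, while the other two are brief general descriptions.

```python
# Hypothetical helper: compare feature names copied by hand from a VMware HCL
# "View" page against the four VAAI-NAS capabilities. Nothing here talks to
# the HCL itself; the input is just a list of strings.

FULL_VAAI_NAS = {
    "Extended Stats",
    "File Cloning",
    "Native SS for LC",
    "Space Reserve",
}

# Short notes on what is lost when a capability is not certified.
IMPACT = {
    "Extended Stats": "array does not report actual space usage of virtual disks",
    "File Cloning": "cloning of powered-off VMs is not offloaded to the array",
    "Native SS for LC": "no storage-level snapshots for linked clones (e.g. Horizon View)",
    "Space Reserve": "no thick-provisioned virtual disks (e.g. FT, Oracle RAC)",
}


def vaai_nas_gaps(listed_features):
    """Return the VAAI-NAS capabilities missing from a listing, sorted by name."""
    listed = {feature.strip() for feature in listed_features}
    return sorted(FULL_VAAI_NAS - listed)


def is_fully_certified(listed_features):
    """True only if all four VAAI-NAS capabilities appear in the listing."""
    return not vaai_nas_gaps(listed_features)


if __name__ == "__main__":
    # Hypothetical listing certified for three of four capabilities,
    # like the centralized NAS example above.
    listing = ["Extended Stats", "File Cloning", "Space Reserve"]
    for feature in vaai_nas_gaps(listing):
        print(f"Missing {feature}: {IMPACT[feature]}")
    print("Full VAAI-NAS:", is_fully_certified(listing))
```

A product with no “View” link corresponds to an empty list here, so it reports all four capabilities as missing.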

Related Articles:

1. My checkbox is bigger than your checkbox – @HansDeLeenheer