Recently I was asked to review some performance testing done by an external party and my initial impression was the performance was well below what I expected.

So over the weekend I setup a block in my lab to reproduce the tests to see if the results were firstly repeatable, and if so, what performance would I get with and without tuning.

The only significant difference between my hardware and the hardware used by the 3rd party was that I used old dual socket Ivy Bridge E5-2670 2.6Ghz 8c processors and the 3rd party had a much newer dual Broadwell E5-2640 v4 2.4Ghz processors.

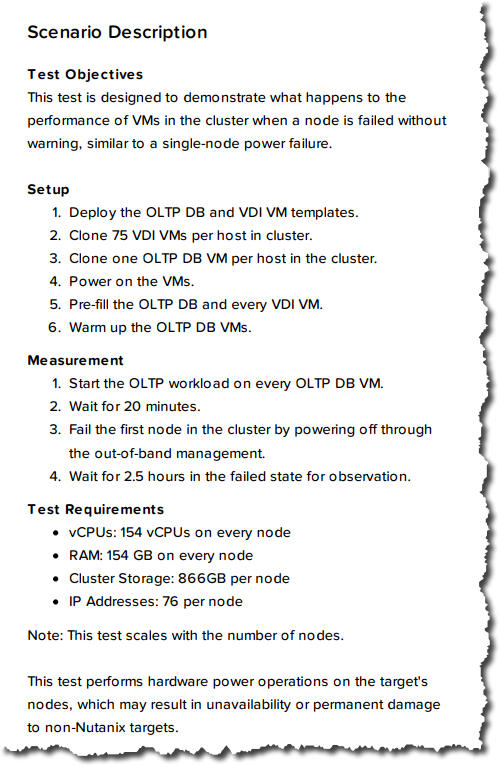

If we compare the two processors using CPUBoss.com we see the following:

Not surprisingly the Broadwell E5-2640 v4 processor is faster, but possibly less than you would expect with a 16.28% better PassMark per core, and in my opinion, the per core value quite importaint especially when considering business critical applications.

None the less, a 16.28% performance improvement per core will be a significant factor for a benchmark with Nutanix as the Controller VM (CVM) is powered by the CPU of the host.

I thought I would whip up a quick post about performance benchmarking to show how different performance results can be on the same hardware depending on just a few factors and why storage benchmarking, especially competitive benchmarking, cannot and should not be trusted when making purchasing decisions.

This test was for a 10k user MS Exchange deployment and the hardware used for testing was performed on in both cases was 1 x 1.92TB SSD and 3 x 4TB SATA drives and they were both tested on the same GA Nutanix AOS build.

The required (or Target) IOPS was just 216 per MS Exchange instance (VM) as shown below by the Jetstress report.

This target is calculated by Jetstress when using the “Exchange Mailbox Profile” test scenario with the following configuration:

The resulting SSD vs SATA ratio makes this test largely about the limitations of SATA performance as >87% of data is being read from the SATA tier.

Test 1: The Jetstress dataset was created and then the performance test was immediately ran for 2hrs with no pre-warming of the metadata or read cache.

Achieved Transactional I/O: 200.663

Avg Log Write Latency: 1.06ms

Avg DB Write Latency: 1.4ms

Avg DB Read Latency: 14ms

This result was 15.61% lower than the 3rd parties result and interestingly if we correct for CPU core performance, it’s less than 1% difference. As this was in line with my expectation knowing the importance of CPU clock speed, I would say for this testing that the baseline results were comparable.

Test 2: The Nutanix tiering was tuned to suit large working sets (which vastly exceed the SSD tier) and the Jetstress dataset was created and the performance test was immediately ran for 2hrs again with no pre-warming of the metadata or read cache.

Before we get to the results, I want to point out that Jetstress is in some ways is very good but in other ways a very unrealistic benchmarking tool as the entire dataset is “active” which is not the case in the real world. However, in one way this is a good thing because a passing Jetstress result in my experience means the production deployment performs very well from a storage perspective especially when using tiered storage which is built around the assumption not all data is active. As a result, a Jetstress test could be considered a “worse case scenario” style test for intelligent tiered storage.

Achieved Transactional I/O: 249.623

Avg Log Write Latency: 0.99ms

Avg DB Write Latency: 1.5ms

Avg DB Read Latency: 12ms

Test 3: I then setup Jetstress as per Nutanix MS Exchange best practices and ran the test again with no pre-warming of the metadata or read cache.

Achieved Transactional I/O: 389.753

Avg Log Write Latency: 0.95ms

Avg DB Write Latency: 2.0ms

Avg DB Read Latency: 17ms

Test 4: I then lowered the Jetstress thread count to the lowest value (roughly 33% lower) which I estimated would achieve the target IOPS (this is to simulate real world requirements) and ran the test again with no pre-warming of the metadata or read cache.

Achieved Transactional I/O: 300.254

Avg Log Write Latency: 0.94ms

Avg DB Write Latency: 1.5ms

Avg DB Read Latency: 12ms

Note: Test 4 achieved the highest I/O per thread.

Test 5: The same configuration as Test 4 but with pre-warming of the metadata cache.

Achieved Transactional I/O: 334

Avg Log Write Latency: 0.98ms

Avg DB Write Latency: 1.9ms

Avg DB Read Latency: 12.4ms

Some of you might be asking, how did test 4 achieve higher transactional I/O and with lower read and write latency than Test 1 & 2 with less threads. Shouldn’t a higher thread count achieve higher IOPS?

The reasons is because the original thread count was pushing the SATA drives past their capabilities, leading to excessive latency. Lowering the thread count allowed the SATA drives to operate at somewhere around their most efficiency range leading to lower latency.

Test 6: The same configuration as Test 5 but with tuned extent cache (RAM read cache) and 100% medadata cached.

Achieved Transactional I/O: 362.729ms

Avg Log Write Latency: 0.92ms

Avg DB Write Latency: 1.7ms

Avg DB Read Latency: 12ms

As we can see from Test 1 through to Test 6, the performance differs by up to 81% depending on how the platform is configured.

Side note and future looking statement. Many of the optimisations I performed above wont be required for long as many of the areas these optimisations help improve are being addressed in upcoming code. In saying that, for a business critical application like Exchange, I don’t think it’s a problem doing some optimisation as long as 90% of the workloads run well by default and we’re only tuning for the 10% (vBCA) workloads.

But out of interest, what would happen if we enabled data reduction? How much of a performance hit would that take?

Test 7: The same configuration as Test 6 but with In-line compression enabled.

Achieved Transactional I/O: 751.275

Avg Log Write Latency: 0.97ms

Avg DB Write Latency: 3.4ms

Avg DB Read Latency: 5.9ms

That’s a 107.46% increase in transactional IO and with in-line compression! Log write latency remained sub ms and read latency has almost halved.

Note: As Jetstress data is highly compressible, (Nutanix achieves 8:1 or higher with non default settings), I tuned the compression slice size to give a more realistic data reduction ratio. The ratio for this test was 3.99:1 and the ratio of SSD to SATA was almost exactly 50% as shown below.

Why did performance improve so much with In-Line compression? Well there is two main reasons:

- More data is being served from the SSD tier as compression allows more effective SSD tier capacity.

- Reads from SATA are faster as less physical data needs to be read to service an I/O due to it being compressed. The higher the compression ratio, the more this can improve.

As we can see, the results varied significantly and had I wanted to optimise the test further, I could have achieved even higher performance but there was no need. The requirements for the solution were already achieved and in the case of Test 7, the requirements were exceeded by 247% meaning the solution had heaps of headroom.

Nutanix best practice is to enable In-line compression for MS Exchange and other databases such as Oracle and SQL as per my tweet below.

This testing was performed on Nutanix Acropolis Hypervisor (AHV) but was not using the upcoming Turbo mode, which will further improve performance and lower overheads.

This is a key point many people forget when benchmarking. If we assume the platforms in question are scalable (e.g.: Like Nutanix), it doesn’t matter if one platform does 100k IOPS and another does 200k IOPS if your requirements are 20k IOPS. Both platforms capabilities vastly exceed the requirement (10k IOPS) from a performance perspective, so performance is not longer a significant factor in your purchasing decision.

Question: Are the above performance results genuine?

All of the above results could be argued to be genuine results, at the same time none of the above represent the best performance that could be achieved, yet the results could be used to try and create FUD if they are improperly represented (which is almost always the case with competitive comparisons whether intentional or otherwise).

Let’s say this was your proof of concept, What should be the take away from benchmarking results like this?

Simple: The solution meets/exceeds your performance requirements.

Now for the point of this article: The truth about storage benchmarking is that there are so many variables that can affect the results that unless you’re truely experienced in benchmarking your applications AND an expert in the platforms you’re benchmarking, your results are unlikely to be indicative of the platforms capabilities and therefore of very little value.

If you’re benchmarking Vendor A vs Vendor B, it’s a waste of time doing “Like for Like” benchmarking because the Virtual machine and application settings which are optimal for one vendor, will likely be different for the other vendor. e.g.: SAN vs HCI.

On the other hand, a more valid test would be vendor A’s best practices vs vendor B best practices, but again if one vendor Jetstress achieves 500 and the other achieves 400, that 20% higher performance is all but irrelevant if your requirements are say, 216 like in this case.

A very good example of invalid “like for like” benchmarking would be to size the active working set (i.e.: The capacity of the data you plan to benchmark against) to fit within the cache/SSD tier of one platform, but exceed the cache/SSD capacity of the other platform. The results will be vastly different and will not be indicative of real world performance. This is what vendors do when competitive benchmarking and it’s likely one of the main reasons we see End User License Agreements (EULA) from most if not all storage vendors preventing publishing benchmark results without written agreement.

So the (unpopular) truth about storage benchmarking is it’s not as easy and building a VM and running I/O meter with the same profile on multiple system like some vendors and even 3rd party storage analysts would have you believe. The vast majority of people (customers, analysts and even vendors) doing benchmarking don’t have the skill/experience to produce repeatable or meaningful results, especially on multiple platforms.

In fact it’s unrealistic/unreasonable to expect a person (customer, vendor, consultant) to be an expert in multiple platforms, and very few people are!

Related Articles:

- Peak Performance vs Real World Performance

- The Key to performance is Consistency