Nutanix provides excellent performance monitoring and analytic capabilities through our HTML 5 based PRISM UI, but what if you want to delve deeper into the performance of a specific business critical application?

Nutanix also provides advanced storage performance monitoring and workload profiling through port 2009 on any CVM which shows very granular details for Virtual disks.

By default, Nutanix secures our CVM and the http://CVM_IP:2009 page is not accessible, but for advanced troubleshooting this can be enabled by using the following command.

sudo iptables -t filter -A WORLDLIST -p tcp -m tcp --dport 2009 -j ACCEPT
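Once the rule is in place, a quick reachability check from a workstation can confirm that port 2009 is answering. The following is a minimal sketch using only the Python standard library. The function name `is_port_open` is an illustrative helper, not part of any Nutanix tooling, and you would pass the IP of one of your own CVMs.

```python
import socket


def is_port_open(host: str, port: int = 2009, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection performs the TCP handshake and raises OSError
        # (refused, unreachable, timed out) if it cannot complete.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `is_port_open("CVM_IP")` with a real CVM address should return `True` after the iptables rule has been added. It only proves the TCP port is reachable, not that the Stargate page rendered correctly.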

When accessing the 2009 page (which is part of the Nutanix process called “Stargate”) you will see things like Extent (In Memory Read) cache usages and hits as well as much more.

On the main 2009 page you will see a section called “Hosted VDisks” (shown below) which lists all the VDisks (the equivalent of a VMDK in ESXi) currently running on that node.

The Hosted VDisks section shows high level details about each VDisk such as Outstanding Operations, capacity usage, Read/Write breakdown and how much data is in the OpLog (Persistent Write Cache).

If you need more information, you can click on the “VDisk Id” and you will get to a page titled “VDisk XXXXX Stats” where the XXXXX is the VDisk ID.

Below is some of the information which can be discovered in the VDisk Stats page.

VDisk Working Set Size (WSS)

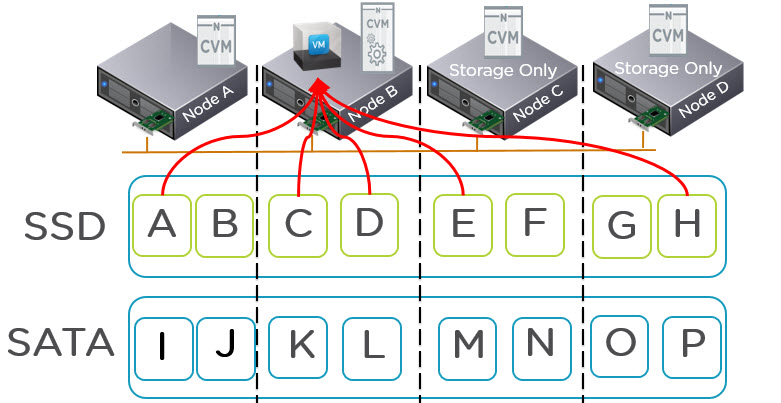

The working set size can be thought of as the data which you would ideally want to fit within the SSD tier of a Nutanix node, which would result in all-flash type performance.

In the below example, over the last 2 minutes the VDisk had a combined (or Union) working set of 6.208GB, and over the last hour it was over 111GB.
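To make the sizing idea concrete, the comparison between a working set and the SSD tier can be written as simple arithmetic. This is only a sketch: the working set figures are the ones quoted above, while the SSD tier capacity (`HYPOTHETICAL_SSD_TIER_GB`) is a made-up value for illustration. It also ignores the fact that the SSD tier is shared with every other VDisk on the node.

```python
def working_set_fit(working_set_gb: float, ssd_tier_gb: float) -> float:
    """Return the fraction of the working set that could sit in the SSD tier (capped at 1.0)."""
    if working_set_gb <= 0:
        return 1.0  # nothing to place, so trivially "fits"
    return min(1.0, ssd_tier_gb / working_set_gb)


# Assumption for illustration only: usable SSD tier capacity on the node.
HYPOTHETICAL_SSD_TIER_GB = 400.0

# Working set sizes quoted in the text (2 minute and 1 hour windows).
for label, wss_gb in (("2 min", 6.208), ("1 hr", 111.0)):
    fit = working_set_fit(wss_gb, HYPOTHETICAL_SSD_TIER_GB)
    print(f"{label}: {wss_gb} GB working set, {fit:.0%} could fit in the SSD tier")
```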

VDisk Read Source

The Read Source is simply which tier of storage is servicing the VDisk's I/O requests. In the below example, 41% was from Extent Cache (In Memory), 7% was from the SSD Extent Store and 52% was from the SATA Extent Store.

In the above example, this was an Exchange 2013 workload where the total dataset was approx 5x the size of the SSD tier. The important point here is that it's not always possible to have all data in the SSD tier, but it's critical to ensure consistent performance. If 90% was being served from SATA and performance was not acceptable, you could use this information to select a better node to migrate (vMotion) the VM to, or to help justify purchasing a new node.
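The percentages on the Read Source panel are just each tier's share of the total reads. As a rough sketch of that arithmetic, the snippet below turns per-tier counters into percentages and flags a SATA-heavy VDisk. The counter values, tier key names and the 90% threshold variable are assumptions chosen to mirror the 41/7/52 split and the 90% scenario described above; they are not read from a real 2009 page.

```python
def read_source_percentages(reads_by_tier: dict) -> dict:
    """Convert per-tier read counters into percentages of the total."""
    total = sum(reads_by_tier.values())
    if total == 0:
        return {tier: 0.0 for tier in reads_by_tier}
    return {tier: 100.0 * count / total for tier, count in reads_by_tier.items()}


# Hypothetical counters picked to reproduce the split quoted in the text.
sample = {
    "extent_cache": 41_000,
    "ssd_extent_store": 7_000,
    "sata_extent_store": 52_000,
}
pct = read_source_percentages(sample)

# Illustrative threshold taken from the "90% from SATA" scenario above.
SATA_HEAVY_THRESHOLD = 90.0
sata_heavy = pct["sata_extent_store"] >= SATA_HEAVY_THRESHOLD

for tier, value in pct.items():
    print(f"{tier}: {value:.0f}%")
print("Consider moving the VM or adding a node:", sata_heavy)
```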

VDisk Write Destination

The Write Destination is fairly self-explanatory. If it's the OpLog, the I/O is random and is being written to SSD. If it goes straight to the extent store (SSD), the I/O is either sequential or, in rare cases, the OpLog is being bypassed because the SSD tier has reached 95% full (which is generally prevented by the Nutanix ILM tiering process).

VDisk Write Size Distribution

The Write Size Distribution is key to determining things like the Windows Allocation Size when formatting drives as well as understanding the workload.

VDisk Read Size Distribution

The Read Size Distribution is similar to the Write Size Distribution in that it's key to determining things like the Windows Allocation Size when formatting drives, as well as understanding the workload. In this case, a 64K allocation size would be ideal, as both the writes (shown above) and the reads (below) are between 32K and 64K 86% of the time, which is expected as this was an Exchange 2013 workload.
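The reasoning above (find the dominant I/O size bucket, then pick an allocation size that covers it) can be sketched as a small heuristic. This is a simplification for illustration, not a Nutanix or Microsoft sizing rule. The function names and the candidate sizes are assumptions, and only the 86% figure for the 32K-64K bucket comes from the text; the other bucket values are invented to make the histograms sum to 100.

```python
def dominant_bucket(histogram: dict) -> tuple:
    """Return the (low_kb, high_kb) bucket holding the largest share of I/Os."""
    return max(histogram, key=histogram.get)


def suggest_allocation_kb(read_hist: dict, write_hist: dict,
                          candidates=(4, 8, 16, 32, 64)) -> int:
    """Pick the smallest candidate size that covers the dominant read and write buckets."""
    upper_kb = max(dominant_bucket(read_hist)[1], dominant_bucket(write_hist)[1])
    for size_kb in candidates:
        if size_kb >= upper_kb:
            return size_kb
    return candidates[-1]  # dominant I/O is larger than every candidate


# Keys are (low_kb, high_kb); values are percent of operations.
# Only the 86% in the 32K-64K bucket is taken from the text.
write_hist = {(0, 4): 5, (4, 8): 3, (8, 16): 2, (16, 32): 4, (32, 64): 86}
read_hist = {(0, 4): 4, (4, 8): 4, (8, 16): 3, (16, 32): 3, (32, 64): 86}

print(suggest_allocation_kb(read_hist, write_hist), "KB")
```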

VDisk Write Latency

The Write Latency shows the percentage of write I/Os which are serviced within the latency ranges shown. In this case, 52% of writes are sub-millisecond. It also shows that, for this VDisk, 1% of I/Os are outliers serviced in 5-10ms. Outside of a lab, if the outliers were a significant percentage, this could be investigated to ensure the VM disk configuration (e.g. PVSCSI and number of VMDKs) is optimal.
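Deciding whether outliers are "a significant percentage" comes down to summing the histogram buckets at or above a chosen latency. The sketch below shows that calculation. Only the 52% sub-millisecond and 1% at 5-10ms figures come from the text; the middle buckets and the helper name `share_at_or_above` are assumptions for illustration.

```python
def share_at_or_above(latency_hist: dict, threshold_ms: float) -> float:
    """Sum the percentage of I/Os in buckets whose lower bound is >= threshold_ms."""
    return sum(pct for (low_ms, _high_ms), pct in latency_hist.items()
               if low_ms >= threshold_ms)


# Keys are (low_ms, high_ms); values are percent of write I/Os.
# The 1-2ms and 2-5ms values are invented so the histogram sums to 100.
write_latency_hist = {(0, 1): 52.0, (1, 2): 30.0, (2, 5): 17.0, (5, 10): 1.0}

outlier_pct = share_at_or_above(write_latency_hist, 5)
print(f"{outlier_pct:.0f}% of writes took 5ms or longer")
```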

VDisk Ops and Randomness

Here we see the number of IOPS, the Read/Write split, MB/s and the split between Random and Sequential.

Summary

For any enterprise grade storage solution, it is important that performance monitoring be easy, as it is with Nutanix via the PRISM UI, but also that you can quickly and easily dive deep into very granular details about a specific VM or VDisk. The above shows just a glimpse of the information which is tracked by default for all VDisks, allowing customers, partners and Nutanix support to quickly and easily monitor and profile workloads.

Importantly, these capabilities are hypervisor agnostic, giving customers the same capabilities no matter which hypervisor they choose.

Related Posts:

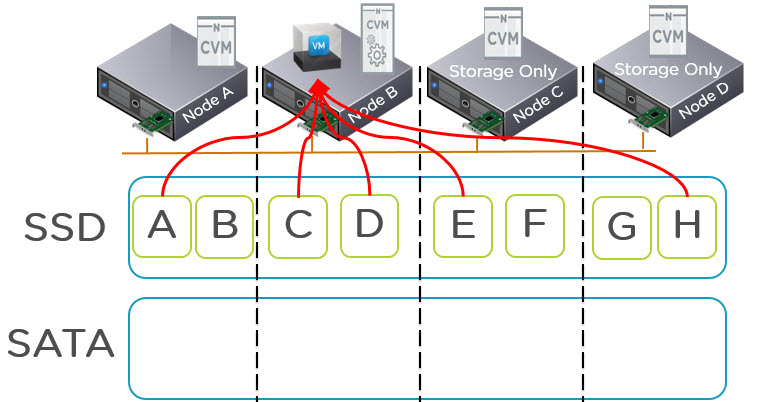

1. Scaling Hyper-converged solutions – Compute only.

2. Acropolis Hypervisor (AHV) I/O Failover & Load Balancing

3. Advanced Storage Performance Monitoring with Nutanix

4. Nutanix – Improving Resiliency of Large Clusters with Erasure Coding (EC-X)

5. Nutanix – Erasure Coding (EC-X) Deep Dive

6. Acropolis: VM High Availability (HA)

7. Acropolis: Scalability

8. NOS & Hypervisor Upgrade Resiliency in PRISM