Problem Statement

In a VMware vSphere environment where future releases of ESXi disable inter-VM Transparent Page Sharing (TPS) by default, what is the most suitable TPS configuration for a Virtual Desktop Infrastructure (VDI) environment?

Assumptions

1. TPS is disabled by default

2. Storage is expensive

3. Two-socket ESXi hosts have been chosen to align with a scale-out methodology.

4. The average VDI user is a Task Worker with 1 vCPU and 2GB RAM.

5. Memory is the first compute level constraint.

6. HA Admission Control policy used is “Percentage of Cluster Resources reserved for HA”

7. vSphere 5.5 or earlier

Requirements

1. VDI environment costs must be minimized

Motivation

1. Reduce complexity where possible.

2. Maximize the efficiency of the infrastructure

Architectural Decision

Enable TPS and disable large memory pages
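On ESXi builds that include the TPS salting change, this decision corresponds to two advanced settings: `Mem.ShareForceSalting` set to 0 (so that pages can again be shared between VMs) and `Mem.AllocGuestLargePage` set to 0 (so that guest memory is not backed by 2MB large pages). The Python snippet below is a hypothetical helper, not part of any VMware tooling. It only builds the matching `esxcli` command strings and runs nothing. Check the values against VMware's KB guidance for the specific ESXi build before applying them.

```python
# Hypothetical helper: builds (but does not execute) the esxcli commands that
# apply this decision on an ESXi host. Verify values for your ESXi build first.

DESIRED_SETTINGS: dict[str, int] = {
    "/Mem/ShareForceSalting": 0,    # 0 = no per-VM salting, inter-VM TPS allowed
    "/Mem/AllocGuestLargePage": 0,  # 0 = do not back guest memory with 2MB pages
}


def build_esxcli_commands(settings: dict[str, int]) -> list[str]:
    """Return one 'esxcli system settings advanced set' command per setting."""
    return [
        f"esxcli system settings advanced set -o {option} -i {value}"
        for option, value in settings.items()
    ]


if __name__ == "__main__":
    for command in build_esxcli_commands(DESIRED_SETTINGS):
        print(command)
```

Keeping the desired values in a single mapping means the same source of truth can be reused when checking hosts after patching.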

Justification

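1. Disabling large pages is essential to maximizing the benefits of TPS, because ESXi does not share 2MB large pages and generally only breaks them into shareable 4KB pages once the host is under memory pressure.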

2. Not disabling large pages would likely result in minimal TPS savings

3. With Kiosk and Task Worker VDI profiles, the percentage of memory that is likely to be shared is higher than for Power Users.

4. Existing shared storage has plenty of spare Tier 1 capacity for vswap files.
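To illustrate justifications 1 and 2, the following toy model (not ESXi's actual implementation) collapses identical memory pages into one shared copy, the way content-based page sharing does, and compares the savings at 4KB and at 2MB granularity. The VM memory images are entirely synthetic. Each "page" is a text label rather than real bytes, and every 2MB region is assumed to contain a single VM-private page among otherwise common OS and application pages.

```python
from collections import Counter
from collections.abc import Hashable, Sequence

SMALL_PER_LARGE = 512  # a 2MB large page spans 512 x 4KB small pages


def pages_saved(pages: Sequence[Hashable]) -> int:
    """Pages freed if each set of identical pages keeps one shared copy."""
    return sum(count - 1 for count in Counter(pages).values())


def group_into_large_pages(small_pages: Sequence[str]) -> list[tuple[str, ...]]:
    """Split one VM's small pages into consecutive 512-page (2MB) chunks."""
    return [
        tuple(small_pages[i:i + SMALL_PER_LARGE])
        for i in range(0, len(small_pages), SMALL_PER_LARGE)
    ]


def synthetic_vm(vm_id: int, large_pages: int = 4) -> list[str]:
    """Hypothetical VM image: common pages plus one private page per 2MB region."""
    pages = []
    for lp in range(large_pages):
        for sp in range(SMALL_PER_LARGE):
            if sp == 0:
                pages.append(f"vm{vm_id}-private-{lp}")  # unique to this VM
            else:
                pages.append(f"common-{lp}-{sp}")  # identical in every VM
    return pages


if __name__ == "__main__":
    vms = [synthetic_vm(vm_id) for vm_id in range(10)]
    small = [page for vm in vms for page in vm]
    large = [chunk for vm in vms for chunk in group_into_large_pages(vm)]
    print("4KB pages saved:", pages_saved(small))
    print("2MB pages saved:", pages_saved(large))
```

With ten such VMs, sharing at 4KB frees 18,396 small pages. Sharing at 2MB frees none, because one differing 4KB page is enough to make every large page unique.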

Implications

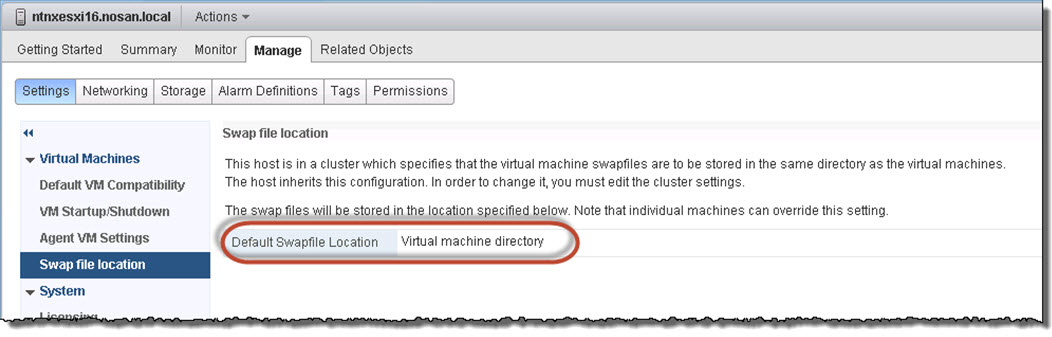

1. Sufficient capacity for VM swap files must be catered for.

2. VDI & Storage performance may be impacted significantly in the event of memory contention.

3. Decreased memory costs may result in increased storage costs.

4. After patching ESXi, operational verification is required to confirm that non-default settings have not been reverted by the patch.

5. Additional CPU overhead on ESXi from enabling TPS.

6. Unlike a configuration with 100% memory reservations, where HA admission control reserves the full RAM of every VM so that performance is approximately the same after a fail-over, HA admission control (when configured to Percentage of Cluster Resources reserved for HA) will only calculate fail-over capacity based on 0MB + VM overhead for each VM, which can lead to significantly degraded performance in an HA event.

7. Higher-core-count (and higher-cost) CPUs may be desirable to drive higher overcommitment ratios, as RAM is less likely to be the point of contention.
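Implication 4 can be partly automated. The sketch below assumes that advanced setting values have already been collected from each host by some means (for example esxcli or PowerCLI; collection is outside its scope). It reports any host where either setting no longer matches the decision. The host names and readings are hypothetical.

```python
# Desired values from this architectural decision (hypothetical helper code).
DESIRED_SETTINGS: dict[str, int] = {
    "/Mem/ShareForceSalting": 0,
    "/Mem/AllocGuestLargePage": 0,
}


def find_reverted_settings(
    observed: dict[str, dict[str, int]],
    desired: dict[str, int] = DESIRED_SETTINGS,
) -> dict[str, list[str]]:
    """Map each host to the settings whose observed value differs from desired.

    'observed' is host name -> {option path -> integer value}.
    A missing option is treated as reverted.
    """
    reverted: dict[str, list[str]] = {}
    for host, values in observed.items():
        drifted = [opt for opt, want in desired.items() if values.get(opt) != want]
        if drifted:
            reverted[host] = drifted
    return reverted


if __name__ == "__main__":
    # Hypothetical post-patching readings: esx02 has salting set back to 2.
    observed = {
        "esx01.example.local": {"/Mem/ShareForceSalting": 0, "/Mem/AllocGuestLargePage": 0},
        "esx02.example.local": {"/Mem/ShareForceSalting": 2, "/Mem/AllocGuestLargePage": 0},
    }
    print(find_reverted_settings(observed))
```

Treating a missing value as reverted errs on the side of flagging a host for manual review.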

Alternatives

1. Use 100% memory reservation and leave TPS disabled (default)

2. Use a 50% memory reservation, enable TPS and disable large pages
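The trade-off between the decision and the two alternatives can be expressed with simple arithmetic. A VM's vswap file is sized at configured memory minus its memory reservation. Percentage-based HA admission control counts each VM's reservation plus its memory overhead. The sketch uses the 2GB Task Worker profile from the assumptions. The 30MB per-VM overhead and the 1,000-desktop count are hypothetical placeholders, because real overhead varies with VM configuration.

```python
from dataclasses import dataclass

VM_MEMORY_MB = 2048    # Task Worker profile from the assumptions
VM_OVERHEAD_MB = 30    # hypothetical per-VM memory overhead
DESKTOPS = 1000        # hypothetical number of desktops


@dataclass(frozen=True)
class Option:
    name: str
    reservation_pct: int  # memory reservation as a % of configured RAM


def swap_per_vm_mb(option: Option) -> int:
    """vswap file size: configured memory minus the memory reservation."""
    return VM_MEMORY_MB * (100 - option.reservation_pct) // 100


def ha_counted_per_vm_mb(option: Option) -> int:
    """Memory counted by percentage-based admission control: reservation + overhead."""
    return VM_MEMORY_MB * option.reservation_pct // 100 + VM_OVERHEAD_MB


OPTIONS = [
    Option("Decision: TPS on, large pages off, no reservation", 0),
    Option("Alternative 1: 100% reservation, TPS off", 100),
    Option("Alternative 2: 50% reservation, TPS on, large pages off", 50),
]

if __name__ == "__main__":
    for option in OPTIONS:
        swap_gb = swap_per_vm_mb(option) * DESKTOPS / 1024
        ha_gb = ha_counted_per_vm_mb(option) * DESKTOPS / 1024
        print(f"{option.name}: vswap {swap_gb:.0f} GB, HA-counted RAM {ha_gb:.0f} GB")
```

Lower reservations shift cost from RAM to vswap storage and shrink the memory that HA admission control sets aside. This is the tension behind implications 1, 3 and 6.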

Related Articles:

1. The Impact of Transparent Page Sharing (TPS) being disabled by default – @josh_odgers (VCDX #90)

2. Example Architectural Decision – Transparent Page Sharing (TPS) Configuration for VDI (1 of 2)

3. Future direction of disabling TPS by default and its impact on capacity planning – @FrankDenneman (VCDX #29)

4. Transparent Page Sharing Vulnerable, Yet Largely Irrelevant – @ChrisWahl (VCDX #104)