As I have previously discussed, AHV is the next-generation hypervisor: it delivers similar value to traditional hypervisors, with much improved management performance and resiliency, while being easier to deploy and scale.

However, one of AHV's weak points has been the visualisation and configuration of virtual networking (Open vSwitch) from a node perspective.

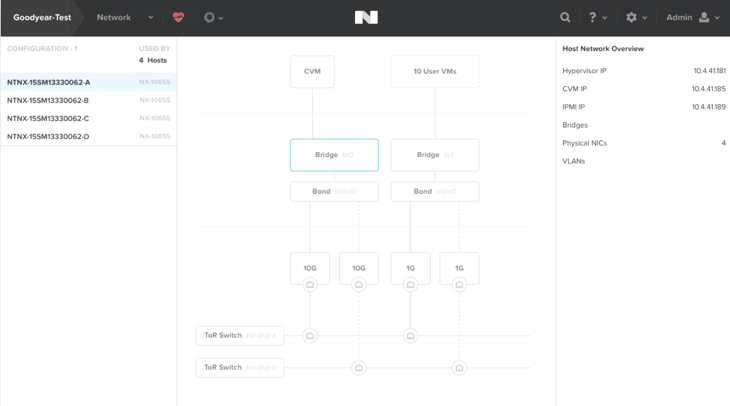

I am pleased to say that, in an upcoming release of AHV, the configuration of virtual networking is integrated into PRISM Element.

The screenshot below shows an example of the Nutanix Controller VM (CVM) and User VMs (UVMs) connected to the underlying bridges and bonds, which in turn connect the virtual machines to the physical network adapters.

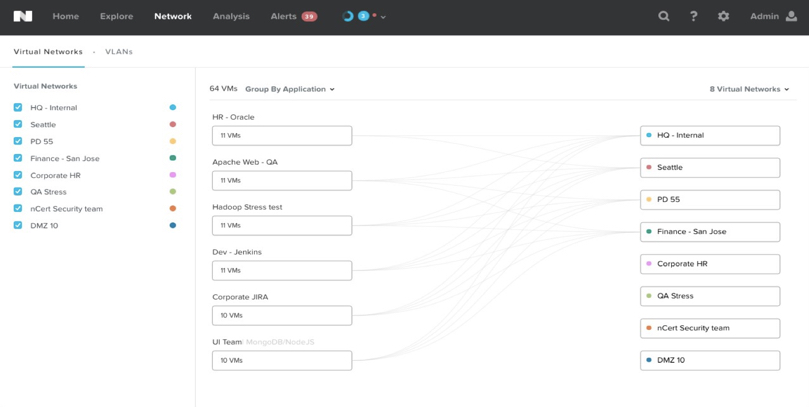
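To make the layering in the screenshot concrete, here is a minimal sketch of the VM to bridge to bond to physical adapter relationship as a small data model. It is illustrative only: the class names, the `uplinks_for` helper, and every bridge, bond, NIC and VM name are hypothetical placeholders, not taken from PRISM or from any Nutanix API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Bond:
    name: str
    nics: List[str]  # physical adapters that back the bond


@dataclass
class Bridge:
    name: str
    bond: Bond
    vms: List[str] = field(default_factory=list)  # VMs attached to this bridge


def uplinks_for(vm: str, bridges: List[Bridge]) -> List[str]:
    """Return the physical adapters a VM reaches through its bridge's bond."""
    for bridge in bridges:
        if vm in bridge.vms:
            return bridge.bond.nics
    return []  # VM is not attached to any known bridge


# Hypothetical host layout; all names below are placeholders.
host_bridges = [
    Bridge("br0", Bond("bond0", ["eth0", "eth1"]), vms=["CVM", "UVM-1"]),
    Bridge("br1", Bond("bond1", ["eth2", "eth3"]), vms=["UVM-2"]),
]

print(uplinks_for("CVM", host_bridges))
print(uplinks_for("UVM-2", host_bridges))
```

In this toy layout the CVM and one user VM share a bridge backed by two adapters, while a second user VM sits on a separate bridge with its own bond, which is the kind of relationship the PRISM view draws graphically.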

Next, we can see a visualisation of grouped applications (groups of VMs) and the virtual networks they are connected to.

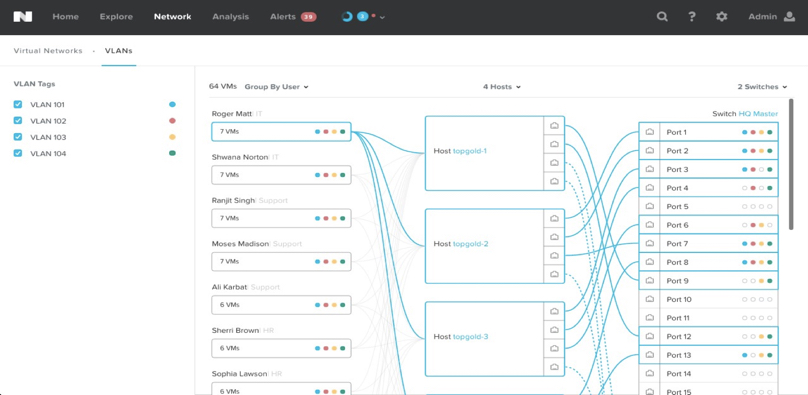

Next, we can see an end-to-end visualisation, from the virtual machines on the AHV host (grouped by user in this example) through to the physical network switches and ports.

Stay tuned for upcoming posts with YouTube videos showing how virtual networking is configured and monitored for different use cases.

Related .NEXT 2016 Posts

- What’s .NEXT 2016 – All Flash Everywhere!

- What’s .NEXT 2016 – Acropolis File Services

- What’s .NEXT 2016 – Acropolis X-Fit

- What’s .NEXT 2016 – Any node can be storage only

- What’s .NEXT 2016 – Metro Availability Witness

- What’s .NEXT 2016 – PRISM integrated Network configuration for AHV

- What’s .NEXT 2016 – Enhanced & Adaptive Compression

- What’s .NEXT 2016 – Acropolis Block Services

- What’s .NEXT 2016 – Self Service Restore