There is a lot of misinformation being spread in the HCI space about Nutanix data protection capabilities. One such example (below) was published recently on InfoStore.

Evaluating Data Protection for Hyperconverged Infrastructure

When I see articles like this, it really makes me wonder about the accuracy of content on these types of websites, as it seems articles are published without so much as a brief fact check from InfoStore.

Nonetheless, I am writing this post to confirm which data protection capabilities Nutanix provides.

- Native Built-In Data Protection

Prior to my joining Nutanix in mid-2013, Nutanix already provided a hypervisor-agnostic, integrated backup and disaster recovery solution with centralised, consumer-grade management through our HTML5-based PRISM GUI.

The built-in capabilities include flexible, VM-centric policies that protect virtualized applications with different RPOs and RTOs, with or without application consistency.

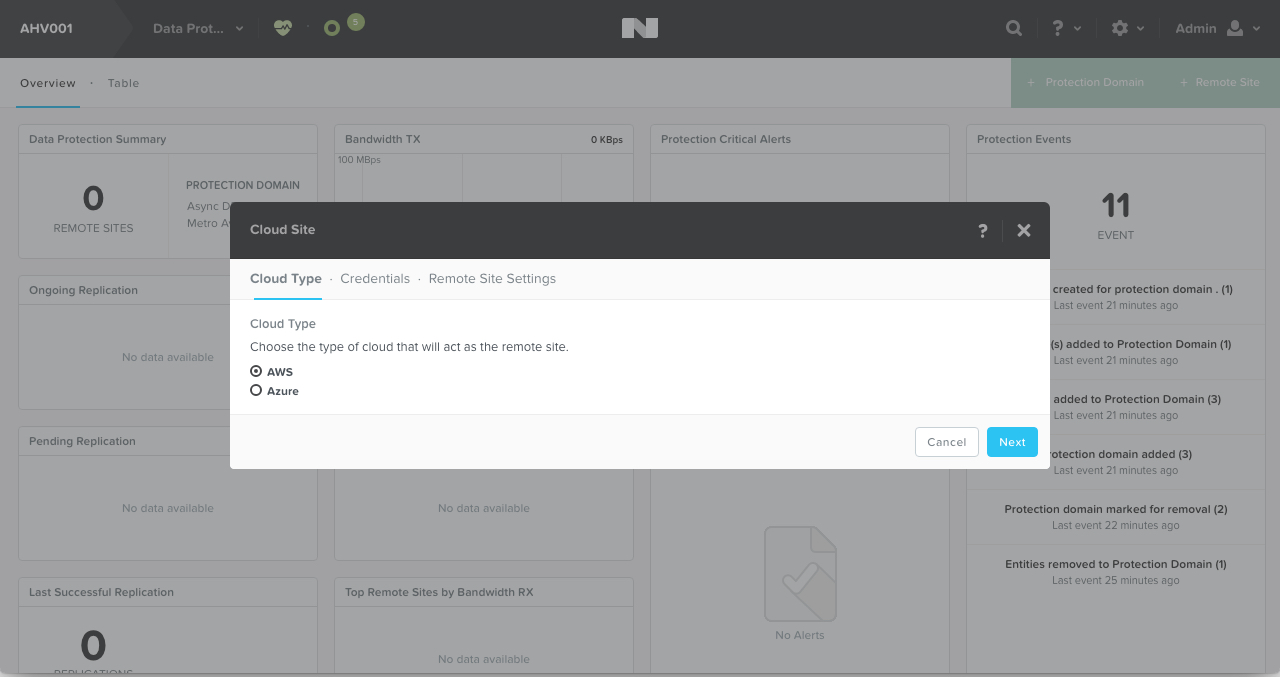
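To make the idea of per-VM policies concrete, here is a minimal sketch of how different RPOs, RTOs and consistency settings could be modelled and checked. The `ProtectionPolicy` class, the `rpo_met` helper and the VM names are all hypothetical and exist only for illustration; they are not Nutanix objects or API calls.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ProtectionPolicy:
    """Hypothetical VM-centric policy; not a Nutanix API object."""
    vm_name: str
    rpo: timedelta        # maximum tolerable data loss
    rto: timedelta        # target time to restore service
    app_consistent: bool  # quiesce the application before the snapshot?


def rpo_met(policy: ProtectionPolicy, last_snapshot: datetime, now: datetime) -> bool:
    """Return True if the newest snapshot is no older than the policy's RPO."""
    return now - last_snapshot <= policy.rpo


# Two VMs with different requirements (illustrative values only).
policies = [
    ProtectionPolicy("sql-prod", timedelta(hours=1), timedelta(minutes=15), True),
    ProtectionPolicy("test-web", timedelta(hours=24), timedelta(hours=4), False),
]

now = datetime(2016, 1, 1, 12, 0)
last_snapshot = datetime(2016, 1, 1, 10, 0)  # two hours before `now`

results = {p.vm_name: rpo_met(p, last_snapshot, now) for p in policies}
print(results)  # {'sql-prod': False, 'test-web': True}
```

With the same two-hour-old snapshot, the VM with a one-hour RPO is out of compliance while the VM with a 24-hour RPO is not, which is why the policy is attached to the VM rather than to a LUN or datastore.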

The solution also supports local, remote, and cloud-based backups, as well as synchronous and asynchronous replication-based disaster recovery.

Currently supported cloud targets include AWS and Azure as shown below.

The video below shows, in real time, how to create application-consistent snapshots from the Nutanix PRISM GUI.

Nutanix can also perform one-to-one, one-to-many and many-to-one replication of application-consistent snapshots to onsite or offsite Nutanix clusters as well as cloud providers (AWS/Azure), ensuring choice and flexibility for customers.

Nutanix native data protection can also replicate between, and recover VMs to, clusters running different hypervisors.
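As a rough illustration of the three replication topologies, the sketch below labels a list of source-to-target pairs. The site names and the `classify_topology` function are assumptions made up for this example and do not correspond to any Nutanix configuration format or API.

```python
from collections import defaultdict


def classify_topology(pairs):
    """Label each (source, target) replication pair by its topology."""
    targets_of = defaultdict(set)  # source -> set of targets (fan-out)
    sources_of = defaultdict(set)  # target -> set of sources (fan-in)
    for src, dst in pairs:
        targets_of[src].add(dst)
        sources_of[dst].add(src)

    labels = {}
    for src, dst in pairs:
        if len(targets_of[src]) > 1:
            labels[(src, dst)] = "one-to-many"
        elif len(sources_of[dst]) > 1:
            labels[(src, dst)] = "many-to-one"
        else:
            labels[(src, dst)] = "one-to-one"
    return labels


# Hypothetical sites: a head office fanning out, branches fanning in, and a simple pair.
pairs = [
    ("hq", "dr-site"),
    ("hq", "aws"),
    ("branch-a", "central"),
    ("branch-b", "central"),
    ("lab", "lab-dr"),
]

labels = classify_topology(pairs)
for pair, label in labels.items():
    print(pair, label)
```

Here `hq` replicating to both a DR site and a cloud target is one-to-many, the two branches sharing `central` are many-to-one, and `lab` to `lab-dr` is a plain one-to-one pair.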

- Commvault IntelliSnap Integration

Nutanix also provides integration with Commvault IntelliSnap, which allows existing Commvault customers to continue leveraging their investment in the market-leading data protection product and to take advantage of other features where required.

The below shows how agentless backups of virtual machines are supported with Acropolis Hypervisor (AHV). Note: Commvault is also fully supported with Hyper-V and ESXi.

Because Commvault calls the Nutanix Distributed Storage Fabric (NDSF) directly, snapshots are taken quickly and efficiently without any dependency on the hypervisor.

- Hypervisor-Specific Support such as VMware vStorage APIs for Data Protection (VADP)

Nutanix also supports solutions which leverage VADP, allowing customers with existing investments in products such as Veeam and NetBackup to continue with their existing strategy until such time as they want to migrate to Nutanix native data protection or to solutions such as Commvault.

- In-Guest Agents

Nutanix supports the use of in-guest agents. These are typically very inefficient with centralised SAN/NAS storage, but because of data locality and because NDSF is a truly distributed platform, in-guest incremental-forever backups perform extremely well on Nutanix: the traditional choke points, such as the network, storage controllers and RAID packs, have been eliminated.

Summary:

As one size does not fit all in the world of IT, Nutanix gives customers the choice to meet a wide range of market segments and requirements, with strong native data protection capabilities as well as third-party integration.