A little while ago I wrote a post titled “Splitting SQL datafiles across multiple VMDKs for optimal VM performance” where I talked about how SQL databases can be split with minimal/no interruption to production to give better performance by spreading the IO load across multiple PVSCSI adapters and virtual machine disks (VMDKs).

In a follow up post titled “SQL & Exchange performance in a Virtual Machine” I mentioned the above article and concluded:

If the DBA is not confident doing this, you can also just add multiple virtual disks (connected via multiple PVSCSI controllers) and create a stripe in guest (via Disk Manager) and this will also give you the benefit of multiple vdisks.

Both posts have been very popular and one of the comments I got via twitter was that creating striped or spanned NTFS volumes in guest was not supported by VMware when using PVSCSI.

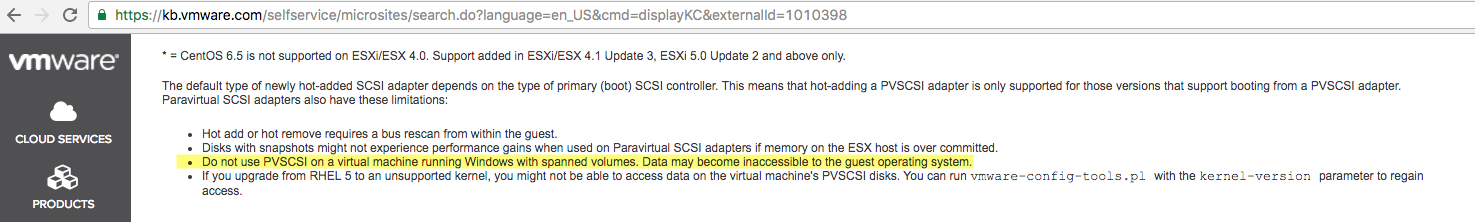

This is stated in VMware KB “Configuring disks to use VMware Paravirtual SCSI (PVSCSI) adapters (1010398)” as shown below:

Prior to writing both posts I was aware of this KB, but after comprehensively testing this numerous times on different platforms over the years, and more recently on Nutanix, I concluded after liaising with many VMware experts (including several VCDXs) that this was either a legacy recommendation which needed to be updated, or simply a mistake by the author of the KB (which can happen as we’re all human).

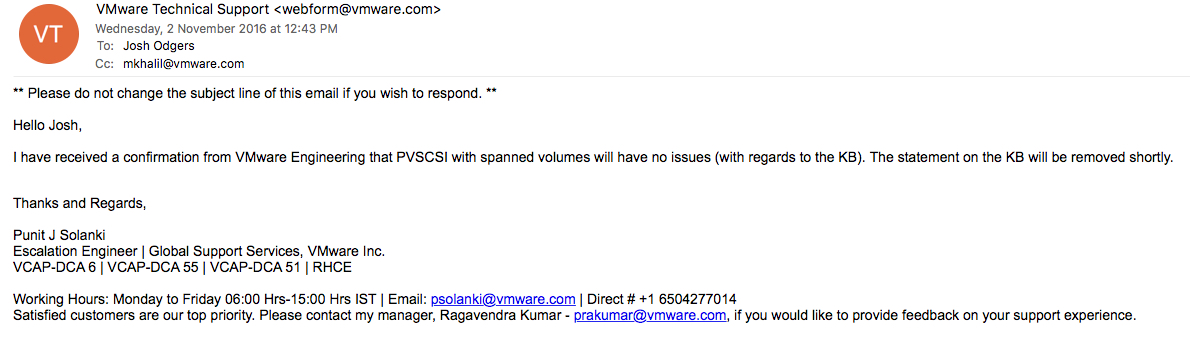

As such, I followed up with VMware by raising a SR on August 14th 2016.

After following up several times I had given up waiting for an answer but I am pleased to say today (2nd November 2016) I finally got a reply.

In summary, spanned (and stripped volumes which was not mentioned in the KB) are supported and to quote VMware GSS “will have no issues”.

One strong recommendation I have is DO NOT use VMDKs hosted in different failure domains (e.g.: LUNs, SAN/NASs) in the one spanned/striped volume as this increases the size of the failure domain and your chances of the volume going offline.

So there you have it, if you need to increase the performance for an application and you are not confident to split databases at the application level, you can (typically) get increased IO performance by using striped volumes in guest which are quick and easy to setup. The only downside is you will need to take your DB offline to copy it to the new volume before bringing it back online.

Hope this puts peoples mind at ease about striped volumes with PVSCSI.