Calculating the usable capacity for your next SAN/NAS is easy. Work out the number of drives you have, what RAID config your going to use and your done, right?!

Wrong! There are numerous factors which come into play to understand the ACTUAL or TRUE usable capacity of a SAN/NAS solution.

So let’s take an example of a traditional SAN/NAS using RAID and work out how much space we can actually use.

Note this is a simplified and generic example, which will vary from vendor to vendor.

Let’s say a SAN/NAS has 100 x 1TB drives (Note: The type of drive is not important for this example) and has the requirement to support mixed workloads such as MS SQL , MS Exchange and general server workloads.

As per vendor best practices, RAID 10 is used to maximize IOPS for SQL / Oracle and other storage intensive applications, RAID 5 is used for things like MS Exchange and RAID 6 (or DP) is used for general server workloads.

The vendor also recommends one hot spare drive per 2 disk shelves to ensure when drives fail, there are sufficient hot spares available.

So let’s start with 100TB RAW and see where things end up.

1. Deducting hot spare drives

So assuming 14 drives per shelf, that’s 7 drives (or 7TB RAW) dedicated to hot spares.

100TB – 7TB = 93TB

2. RAID Overhead

Let’s assume 20% of our workloads require RAID 10, so 20 drives are used. RAID 10 has a usable capacity of 50% so 20TB – 50% = 10TB

Next let’s say 40% of our workloads use RAID 5, so 40 drives broken up into 5 x RAID 5s each with 8 drives in a 7+1 Parity configuration. Therefore with 5 x RAID 5s volumes we loose 5 drives (5TB RAW) worth of capacity.

The final 40% of our workloads use RAID6 (or DP), so 40 drives broken up into 5 x RAID 6s each with 8 drives in a 6+2 Parity configuration therefore with 5 x RAID 6s we loose 10 drives (10TB RAW) worth of capacity.

93TB – 10TB (RAID 10) – 5TB (RAID5) – 10TB (RAID6) = 68TB remaining

3. Free Space on the platform required to ensure performance

For most traditional storage solutions, the vendors recommend ensuring a specific percentage of free space to ensure performance remains consistent.

For some vendors this is 20% and others say around 30%.

For this example, I will assume best case scenario of 20%.

68TB – 20% (Free space for performance) = 54.4TB

4. Free space per LUN

Vendors typically recommend having between 10-20% free space per LUN to account for unexpected growth, VM level snapshots etc. This makes perfect sense as if a LUN runs out of space, its a bad day for the I.T dept.

For this example, I will assume only 10% free space per LUN but it could easily be 20% further reducing usable capacity.

54.4TB – 10% (Free space per LUN) = 48.96TB

5. Free space per VMDK

As with physical servers, we don’t want our VMs drives running out of capacity, as a result it is common to size VMDKs well above what is strictly required to make capacity management (operational tasks) easier.

I typically see architects recommending upwards of 10-20% free space per VMDK over and above what is required to account for unexpected growth, OS patching etc. This makes perfect sense for the same reason as we have free space per LUN because if space runs out for a VM, it’s another bad day for I.T.

For this example, I will assume only 10% free space per VMDK.

48.96TB – 10% (Free space per VMDK) = 44.064TB

Now where are we at?

So far, the first 5 points are fairly easy to calculate and if you agree or not with the specific examples or percentage deductions, I’d suggest few would disagree these are factors which reduce usable disk space for traditional SAN/NAS deployments.

Next we will look at various factors which further reduce usable capacity. Each of these factors will vary from customer to customer, which further complicates the sizing exersize and results in lower usable capacity than what you may believe.

6. Silos for Performance

In this example, we have assumed only 20% of our drives are configured for high I/O with RAID 10, but in many cases the drives required for performance could be a much higher percentage.

Now to get the IOPS required for these storage intensive applications, its common to see the capacity utilization of the LUNs be much lower than the usable capacity because the storage is IOPS constrained, not capacity.

This leads to Silos of drives with low utilization, where the remaining capacity cannot (or at least should not) be shared with other VMs as this would likely impact the performance of the IO intensive VMs.

So for example, if our RAID 10 LUNs have 50% free space (which I personally have found to be common) then we’re effectively wasting 5TB (50% of the RAID 10s 10TB usable).

44.064TB – 10% (Wasted Capacity for Performance Silos) = 39.65TB

7. Silos of (or Fragmented) Usable Capacity

In this example, we have assumed 40% of our drives are configured for RAID 5 and the remaining 40% for RAID 6 (DP) to suit the different workloads in this environment, as a result we have 2 “Silos” of usable capacity.

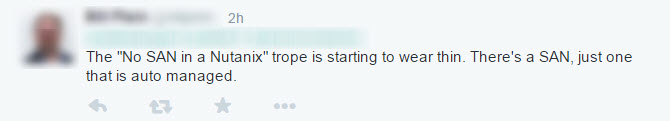

In this post I have described 5 x 8 drive RAID 5s and 5 x 8 Drive RAID 6 volumes. The below diagram is an example of what an environment in this configuration may have with regards to free space per LUN.

So we can see the average free space per LUN is 20%, but it varies from one LUN having only 5% free space and another having 35%.

In this case, when creating a new VM, or adding or expanding VMDKs for existing VMs, we have a situation where we will need to be careful about where we place a new VMDK from a capacity perspective but keeping in mind performance as well.

Now not all VMs or VMDKs are the same size, so if a new VMDK needs to be 500GB even though the environment may have well in excess of 500GB available, the fact that the free space is fragmented across multiple LUNs means we cannot create the new VMDK without first migrating VMs across the LUNs.

Now Storage DRS can do a reasonable job of this, but that takes time and impacts performance (during the Storage vMotion) and depending on the size of the VMs in the environment may not always be able to solve the issue.

Best case scenario, in my experience is at least 10% of capacity is wasted simply because of the fact the drives are carved up into RAID groups and VMs don’t fit within the inflexible LUNs.

39.65TB – 10% (Wasted Capacity due to de-fragmented free space) = 35.68TB

Usable space so far from 100TB RAW is only 35.68TB or approx 1/3rd!

Other factors which reduce usable capacity?

8. LUN Provisioning Type

In many cases, especially when talking about high performance applications, storage vendors recommend using Thick Provisioned LUNs.

As a result limited or no overcommitment can be achieved which reduces the usable capacity due to the thick provisioning.

It’s anyone’s guess how much space is wasted as a result.

Summary:

From the 100TB RAW factoring in what I believe to be realistic configuration of RAID, the impact of free space requirements, thick provisioning and capacity fragmentation we end up with only 35.68TB usable capacity or approx 1/3rd of the RAW.

Now most vendors provide some form of data reduction such as compression/de-duplication, others recommend some thin provisioning and these may increase the effective capacity, but this example shows its not as simple as you think to size for SAN/NAS storage and the overhead of RAID is only one of the many factors which impact the effective usable capacity.

In Part 2, I will run through a similar example for Nutanix usable capacity.

![[activities]Saurus3_414x2](http://www.joshodgers.com/wp-content/uploads/2015/04/activitiesSaurus3_414x2.jpg)