Let's start with a simple example. The example below shows a 4 node cluster mixing 2 x NX-3060 nodes with 2 x NX-8035 nodes. Both node types share the same Haswell CPU types, but the NX-3060 has ~2TB usable and the NX-8035 has ~8TB usable.

Assuming the cluster capacity was 50% utilized the NDSF layer would look similar to this:

The above shows the NDSF having a total Storage Pool capacity of 20TB with 50% used (10TB). As we have a heterogeneous cluster, we have 2 different node types with vastly different usable capacity.
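The arithmetic behind those figures can be sketched in a few lines. This is only an illustration of the example above: the per-node capacities are the approximate values quoted earlier, and the names `NODE_USABLE_TB`, `cluster`, `pool_capacity_tb` and `used_tb` are hypothetical, not part of any Nutanix tooling.

```python
# Approximate usable capacity per node type in TB, from the example above.
NODE_USABLE_TB = {"NX-3060": 2.0, "NX-8035": 8.0}

# Hypothetical inventory for the 4 node example cluster.
cluster = ["NX-3060", "NX-3060", "NX-8035", "NX-8035"]


def pool_capacity_tb(nodes):
    """Sum usable capacity across all nodes into one storage pool figure."""
    return sum(NODE_USABLE_TB[model] for model in nodes)


def used_tb(nodes, utilization):
    """Capacity consumed at a given pool utilization (0.0 to 1.0)."""
    return pool_capacity_tb(nodes) * utilization


print(pool_capacity_tb(cluster))  # 20.0
print(used_tb(cluster, 0.5))      # 10.0
```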

Nutanix Disk Balancing automatically balances the storage to ensure the utilization percentages of all SSDs/HDDs within the cluster are within +/-15%. This means administrators do not have to worry about capacity management on a per node basis; capacity management only needs to be performed at the storage pool (cluster) layer.
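To make the idea of balancing on utilization percentage (rather than on absolute TB) concrete, here is a rough sketch. It is not the NDSF algorithm: it works per node instead of per disk, and it assumes the 15% figure means "within 15 percentage points of the cluster-wide utilization", which is one possible reading of the statement above. The function names are hypothetical.

```python
def utilization_pct(used_tb, capacity_tb):
    """Utilization of one node as a percentage."""
    return 100.0 * used_tb / capacity_tb


def out_of_balance(nodes, tolerance_pct=15.0):
    """Return indexes of nodes further than tolerance_pct from the cluster average.

    nodes is a list of (used_tb, capacity_tb) tuples.
    """
    total_used = sum(used for used, _ in nodes)
    total_capacity = sum(capacity for _, capacity in nodes)
    cluster_pct = utilization_pct(total_used, total_capacity)
    return [
        index
        for index, (used, capacity) in enumerate(nodes)
        if abs(utilization_pct(used, capacity) - cluster_pct) > tolerance_pct
    ]


# Same 10TB of data on the 2 x 2TB + 2 x 8TB cluster, placed two ways.
skewed = [(2.0, 2.0), (2.0, 2.0), (3.0, 8.0), (3.0, 8.0)]
even = [(1.0, 2.0), (1.0, 2.0), (4.0, 8.0), (4.0, 8.0)]

print(out_of_balance(skewed))  # [0, 1]
print(out_of_balance(even))    # []
```

Note that in the balanced case the larger nodes hold four times as much data as the smaller ones, yet every node sits at the same 50% utilization.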

Advantage 1: No silos of storage capacity in heterogeneous environments

Advantage 2: NDSF disk balancing ensures the data is evenly distributed throughout the cluster

Advantage 3: There is no requirement for hypervisor level storage capacity management such as Storage DRS (SDRS).

For more information on why Storage DRS is not required see: Storage DRS and Nutanix – To use, or not to use, that is the question?

In a heterogeneous environment, it is likely you will have multiple workloads with different capacity and performance requirements. The below diagram shows the same 4 node cluster, with a single storage pool and 4 containers with different data protection and reduction settings to suit a wide range of application requirements.

Note: The RF3 container shown below would only be possible in clusters of 5 nodes or more, but is shown to illustrate the flexibility/capabilities of NDSF.

The storage pool itself has up to 20TB usable (assuming RF2 and excluding data reduction savings). In the Pool we can see four Containers, which can be thought of as policies that can be applied to Virtual Machines or Virtual Disks.

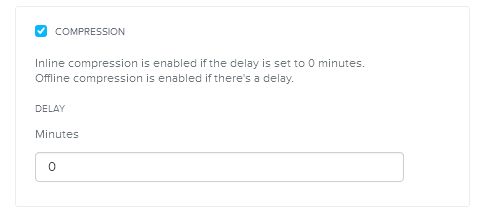

Container01 is configured with RF2 and In-Line compression and reports 10TB free space as the underlying storage pool (where capacity is managed) is 50% utilised. Therefore the Container reports free space as all the available capacity within the Storage Pool based on its configured RF.

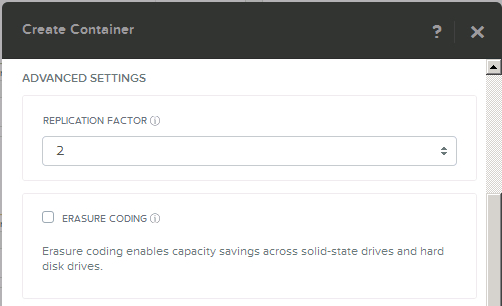

Container02 has RF2, In-Line compression and EC-X enabled, but you will note it also reports 10TB free space. Capacity is not assigned to a container; it's shared between all containers within a Storage Pool.

Container03 is configured with RF3, which differs from Containers 01 and 02. As such, the container reports free space based on its configured RF of 3, so it shows 13.3TB usable and 6.66TB free space, as that is the maximum data that can be supported in that container based on its storage policies.

Container04 reports the same free space as Containers 01 and 02, as it's configured with the same RF. While Container04 has all data reduction technologies enabled, the Container reports actual free space. As data reduction takes effect, the usable capacity will change.
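The container figures above follow from dividing the same underlying pool by each container's RF. The sketch below reproduces them under two assumptions that are mine rather than stated Nutanix behaviour: that the 20TB usable at RF2 corresponds to 40TB of raw capacity, and that a container's reported free space is simply the pool's free raw capacity divided by its RF. Data reduction savings are ignored, and all names are hypothetical.

```python
POOL_USABLE_AT_RF2_TB = 20.0  # from the example above
POOL_UTILIZATION = 0.5        # pool is 50% utilised

# Assumption: usable capacity at RF2 is half of the raw capacity.
POOL_RAW_TB = POOL_USABLE_AT_RF2_TB * 2


def container_view(rf, raw_tb=POOL_RAW_TB, utilization=POOL_UTILIZATION):
    """Return (usable_tb, free_tb) as a container with the given RF would see it."""
    usable = raw_tb / rf
    free = usable * (1.0 - utilization)
    return usable, free


for name, rf in [("Container01", 2), ("Container02", 2),
                 ("Container03", 3), ("Container04", 2)]:
    usable, free = container_view(rf)
    print(f"{name}: RF{rf} usable={usable:.2f}TB free={free:.2f}TB")
```

For RF3 this gives roughly 13.33TB usable and 6.67TB free, which matches the 13.3TB and 6.66TB quoted above once the figures are truncated.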

It is possible to set capacity reservations on Containers where an application or tenant requires a guarantee as to the usable capacity available. It is also possible to set limits on containers to prevent workloads using more than a specified amount of capacity. However, for most use cases, I recommend not using Reservations or Limits and simply managing capacity at the Storage Pool layer.

Nutanix also supports VMs with more assigned/used capacity than the node they are running on. For more information see: What if my VMs storage exceeds the capacity of a Nutanix node?

Regardless of what node type/s reside within a Nutanix cluster, there are no advanced settings to configure such as Queue Depths, VAAI and multi-pathing, which can be required when mixing legacy storage platforms in the same cluster. There is also no requirement for Storage DRS to manage either performance or capacity, as discussed earlier.

Advantage 3: No silos of storage capacity, all capacity is shared in the storage pool

Advantage 4: Storage policies such as RF and Data Reduction can be changed on the fly as required and multiple policies are supported within the same cluster.

For more information about Nutanix data reduction technologies, see: Nutanix Implementation of Data Avoidance & Reduction Technologies

Regardless of the mixture of node types and their respective capacity/performance characteristics, there is no advanced configuration required to achieve optimal performance.

Nutanix automatically manages I/O pathing. Data locality ensures most data is read locally, and writes are always written local to the VM with replicas then distributed throughout the cluster, which minimizes the chances of hot spots by default.

In the unlikely event one node's local SSD tier becomes saturated, NDSF will automatically write data across the shared SSD tier until the local node's SSD tier has sufficient capacity to resume local writes. This avoids the requirement for a storage admin to take any corrective actions.

Advantage 5: In the unlikely event of saturation of a node's SSD tier, NDSF automatically redirects new I/O until ILM (tiering) can free up capacity within the local tier.
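The "write locally unless the local SSD tier is saturated" behaviour described above can be pictured with a toy model. This is a deliberate simplification and not how NDSF is implemented: it picks a single remote node with the most free SSD instead of spreading writes across the shared tier, it ignores replicas and ILM, and the function and node names are made up for illustration.

```python
def choose_write_target(local_node, write_gb, ssd_free_gb):
    """Pick the node whose SSD tier receives the primary copy of a write.

    ssd_free_gb maps node name -> free SSD capacity in GB.
    """
    # Prefer the node the VM runs on, preserving data locality.
    if ssd_free_gb[local_node] >= write_gb:
        return local_node
    # Local tier is saturated: fall back to the remote node with the most free SSD.
    remote = {node: free for node, free in ssd_free_gb.items() if node != local_node}
    return max(remote, key=remote.get)


ssd_free_gb = {"node-a": 5, "node-b": 300, "node-c": 120}

print(choose_write_target("node-b", 20, ssd_free_gb))  # node-b
print(choose_write_target("node-a", 20, ssd_free_gb))  # node-b
```

Once tiering frees up space on `node-a`, the same call returns `node-a` again, which mirrors the "resume local writes" behaviour described above.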

NDSF natively distributes writes throughout all nodes within the cluster. This means all nodes within heterogeneous clusters increase the capacity, performance and resiliency of the entire cluster.

To increase the performance of a single VM, you have numerous options. All you need to do is migrate the VM (vMotion for ESXi, Live Migration for Hyper-V or Migrate for AHV) to a node with higher spec physical processors, more SSD drives and/or more SATA spindles.

There is no requirement to Storage vMotion, or relocate the VM to a new Datastore/Container. NDSF manages the storage layer automatically and will localize hot data if/when required.

Advantage 6: No silos of storage capacity, all capacity is shared in the storage pool

Advantage 7: All nodes contribute to the capacity, performance and resiliency of the cluster

Heterogeneous clusters are managed by a single HTML 5 GUI called PRISM. There is no need to access multiple management interfaces for different storage types.

Advantage 8: Heterogeneous clusters are managed via a single HTML 5 GUI.

Nutanix also supports Pin to SSD which allows workloads requiring all flash to reside within a hybrid (SSD+SATA) cluster and be guaranteed all flash performance.

VMs or Virtual Disks can also be marked to be stored solely in Flash on the fly if/when required and vice versa.

Advantage 9: No silos required for workloads requiring All Flash performance

Nutanix eliminates the complexity around managing performance at a datastore layer. Nutanix supports up to the chosen hypervisor's limits, e.g. the vSphere HA limit is 2048 VMs per datastore. As all controllers within a cluster actively service all datastores (Containers), performance isn’t constrained at a datastore layer like with traditional storage products.

For more information see: Unlimited VMs per datastore? Its not a myth with Nutanix!

Advantage 10: No performance concerns/constraints at the datastore level

What about Considerations for Heterogeneous Clusters?

From a performance perspective, always ensure your N+x (e.g. N+1, N+2, etc.) node/s are sized >= the largest node in the cluster. This ensures that in the event of a node failure, workloads benefiting from higher performance nodes can fail over to equivalent nodes.

From a capacity perspective, for NDSF to be able to restore the configured RF (RF2 or RF3) in the event of a node failure, sufficient capacity must exist within the storage pool. As such, when using high capacity nodes such as NX-8035s, NX-8150s or NX-6035C storage only nodes, ensure you have >= the capacity of the largest node free within the storage pool.
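The capacity rule above is easy to check with a few lines of arithmetic. The sketch below applies it to the example 2 x 2TB + 2 x 8TB cluster. It only encodes the rule of thumb "free capacity >= largest node" and is not a substitute for proper sizing tools; the function names are hypothetical.

```python
def can_restore_rf(node_capacities_tb, pool_used_tb):
    """True if free pool capacity is at least the capacity of the largest node."""
    pool_tb = sum(node_capacities_tb)
    free_tb = pool_tb - pool_used_tb
    return free_tb >= max(node_capacities_tb)


def max_safe_used_tb(node_capacities_tb):
    """Highest pool usage that still leaves the largest node's capacity free."""
    return sum(node_capacities_tb) - max(node_capacities_tb)


nodes_tb = [2.0, 2.0, 8.0, 8.0]

print(can_restore_rf(nodes_tb, 10.0))  # True: 10TB free >= 8TB largest node
print(can_restore_rf(nodes_tb, 13.0))  # False: only 7TB free
print(max_safe_used_tb(nodes_tb))      # 12.0
```

For this example cluster the rule works out to keeping pool usage at or below 12TB, i.e. 60% of the 20TB pool.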

Advantage 11: Performance and availability sizing for heterogeneous clusters is simple.

Another consideration is for mission-critical or high I/O applications: spread these evenly across the nodes and ideally ensure the active working set fits within the local SSD tier. Doing so will maximise performance, but in the event a very large workload cannot fit within the local SSD tier, its data will reside within the shared SSD tier and be actively serviced by multiple Controller VMs.

For more information about sizing see: Rule of Thumb: Sizing for Storage Performance in the new world.

Advantage 12: The NDSF shared SSD tier ensures in the event a workload exceeds the local SSD capacity that the application still enjoys all flash performance by distributing data intelligently across the cluster.

Over time, when adding new nodes, VMs can be quickly/easily migrated to newer, higher performance/capacity nodes without any preparation. The VMs will immediately benefit from the newer nodes' CPU, RAM and storage performance even if most of their data is still stored on older node types.

Older nodes can be non-disruptively removed once they are end of life, again without any preparation or administrator intervention.

Advantage 13: Workloads on NDSF benefit from newer generation nodes immediately without complex design/migration activities.

Summary:

- Nutanix supports and recommends heterogeneous clusters

- No complexity with multi-pathing, it’s optimal out of the box

- No custom per datastore configuration

- VAAI just works, no advanced configuration required due to mixed node types

- No compromise required to mix node types

- No silos of storage capacity, all capacity is shared in the storage pool

- All nodes contribute to performance of the cluster

- No balancing VMs across datastores/storage devices is required to improve performance/resiliency

- NDSF disk balancing ensures the data is evenly distributed throughout the cluster helping avoid hotspots

- The distribution of RF traffic throughout the cluster also helps avoid hotspots

- No silos required for workloads requiring all flash performance

- NDSF ensures VMs can immediately benefit from the addition of newer generation node types

- Nodes can be added/removed without a system administrator performing data migrations