In a previous post, "How to configure Network I/O Control (NIOC) for Nutanix (or any IP Storage)", I showed just how easy configuring NIOC was back in the vSphere 5.x days.

It was based around the concepts of Shares and Limits. Of the two, I have always recommended Shares, which enable fairness while allowing traffic to burst if/when required. NIOC v2 was a simple and effective solution for sure.
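As a rough illustration of why shares give fairness without blocking bursts, here is a minimal Python sketch of share-weighted bandwidth allocation on a single uplink. It is a simplified model only, not VMware's actual scheduler: the function name `allocate_by_shares`, the traffic type names, the share values and the demand figures are all assumptions made up for the example.

```python
def allocate_by_shares(link_mbps, shares, demand_mbps):
    """Split link capacity between traffic types in proportion to their shares.

    Simplified weighted fair-share model (an assumption, not the NIOC
    implementation): a traffic type that needs less than its proportional
    slice keeps only what it needs, and the leftover is re-divided among
    the remaining types according to their shares.
    """
    allocation = {name: 0.0 for name in shares}
    # Only traffic types that actually want bandwidth compete for it.
    active = {name for name in shares if demand_mbps.get(name, 0) > 0}
    remaining = float(link_mbps)

    while active and remaining > 1e-9:
        total_shares = sum(shares[name] for name in active)
        # Types whose demand fits inside their proportional slice this round.
        satisfied = {
            name for name in active
            if demand_mbps[name] <= remaining * shares[name] / total_shares
        }
        if not satisfied:
            # Everyone wants more than their slice: split strictly by shares.
            for name in active:
                allocation[name] = remaining * shares[name] / total_shares
            break
        for name in satisfied:
            allocation[name] = float(demand_mbps[name])
            remaining -= demand_mbps[name]
        active -= satisfied

    return allocation


if __name__ == "__main__":
    # Hypothetical share values and demands on a 10Gb uplink.
    shares = {"vm": 100, "storage": 100, "vmotion": 50}

    # No contention: every traffic type simply gets what it asks for.
    print(allocate_by_shares(10000, shares, {"vm": 1000, "storage": 2000, "vmotion": 0}))

    # Contention: VM traffic needs little, so storage and vMotion split the
    # rest 2:1 according to their shares.
    print(allocate_by_shares(10000, shares, {"vm": 2000, "storage": 9000, "vmotion": 9000}))
```

In this model shares only matter when the link is contended, and bandwidth a traffic type is not using is handed to the types that want it.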

Enter NIOC v3 in vSphere 6.0.

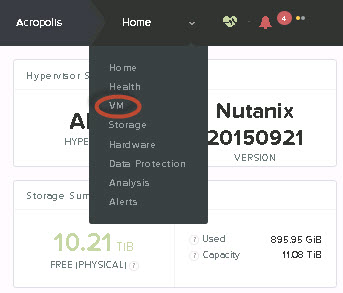

Once you upgrade to NIOC v3, you can no longer use the vSphere C# client, and NIOC now also has the concept of bandwidth reservations, as shown below:

I am not really a fan of reservations in NIOC or for CPU (memory reservations are good, though). In fact, I’ll go as far as to say NIOC was great in vSphere 5.x and I don’t think it needed any changes.

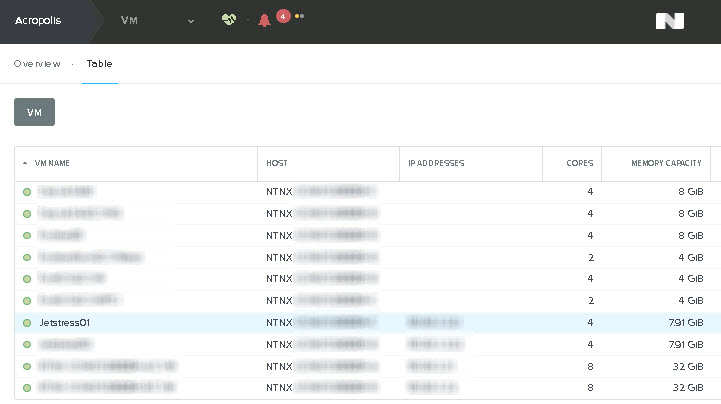

However, with vSphere 6.0 Release 2494585, you may experience issues when attempting to create a custom network resource pool under the “Resource Allocation” menu by using the “+” icon (as shown below).

As shown below, before even pressing the “+” icon to create a network resource pool, the yellow warning box tells us we need to configure a bandwidth reservation for virtual machine system traffic first.

So my first thought was: OK, I can do this, but why? I prefer using Shares as opposed to Limits or Reservations because I want traffic to be able to burst when required, and I don't want bandwidth to be wasted when certain traffic types are not using it.

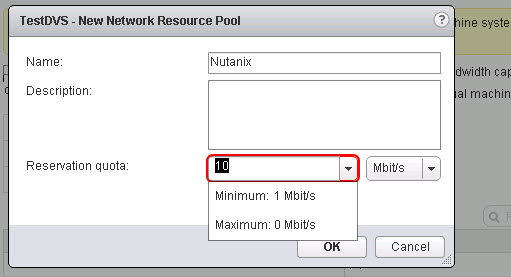

In any case, I followed the link in the warning and went to set a minimal reservation of 10Mbit/s for Virtual machine traffic as shown below.

On pressing “Ok”, I was greeted with the error below saying the “Resource settings are invalid”. As shown below, I also tried higher reservations without success.

I spoke to a colleague and had them try the same in a different environment and they also experienced the same issue.

I currently have a call open with VMware Support. They have acknowledged this is an issue and it is being investigated. I will post updates as I hear from them, so stay tuned.