Now that Acropolis Hypervisor (AHV) has been GA for approximately 18 months (with many customers using it in production well before official GA), Nutanix has received a lot of positive feedback about its ease of deployment, management, scaling and performance. However, a common theme has been that customers want the ability to create rules to separate VMs and to keep VMs together, much like vSphere’s DRS functionality.

Since GA, AHV has supported some basic DRS functionality, including initial placement of VMs and the ability to restore a VM’s data locality by migrating the VM to the node containing the most data locally.
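As a rough illustration of the data locality idea, the sketch below picks the node that holds the most of a VM’s data and reports whether a migration would be needed. It is not AHV’s actual implementation: the function names, the `local_bytes` mapping and the host names are all hypothetical.

```python
def best_locality_host(local_bytes_by_host):
    """Return the host that stores the most of the VM's data locally.

    local_bytes_by_host is a hypothetical mapping of host name -> amount
    of the VM's data held on that host.
    """
    return max(local_bytes_by_host, key=local_bytes_by_host.get)


def needs_migration(current_host, local_bytes_by_host):
    """True when the VM is not already on its best-locality host."""
    return current_host != best_locality_host(local_bytes_by_host)


# Illustrative numbers only: most of this VM's data lives on host-b.
local_bytes = {"host-a": 120, "host-b": 480, "host-c": 60}
target = best_locality_host(local_bytes)
migrate = needs_migration("host-a", local_bytes)
```

A real scheduler would also weigh CPU and memory headroom on the target before moving the VM; this sketch looks at locality alone.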

These features work very well, so the lack of affinity and anti-affinity rules was the main pain point. While AHV is neither designed nor intended to have feature parity with ESXi or Hyper-V, DRS-style rules are one area where similar functionality makes sense, whereas in many other areas AHV is and will remain very different to legacy hypervisors.

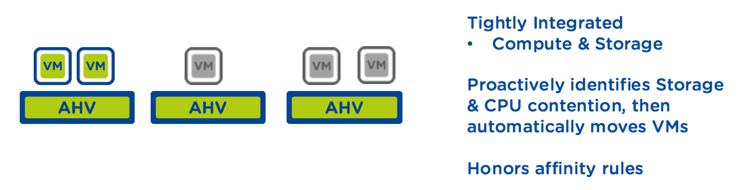

So it should come as no surprise that the AHV scheduler now provides VM/host affinity and anti-affinity rule capabilities which (similar to vSphere DRS) allow for “Should” and “Must” rules to control how the cluster enforces the rules.

Rule types which can be created:

- VM-VM affinity: Keep VMs on the same host.

- VM-VM anti-affinity: Keep VMs on separate hosts.

- VM-Host affinity: Keep a given VM on a group of hosts.

- VM-Host anti-affinity: Keep a given VM out of a group of hosts.

- Affinity and Anti-affinity rules are cross-cluster policies.

- Users can specify MUST as well as SHOULD enforcement of DRS rules.
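To make the four rule types and the two enforcement levels concrete, here is a minimal sketch that models them and checks a proposed VM placement. It is an illustration only, not the AHV scheduler or any Nutanix API: the `Kind`, `Rule`, `is_satisfied` and `evaluate` names are hypothetical, and it assumes that a broken MUST rule blocks a placement while a broken SHOULD rule is merely reported.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    VM_VM_AFFINITY = "vm-vm affinity"
    VM_VM_ANTI_AFFINITY = "vm-vm anti-affinity"
    VM_HOST_AFFINITY = "vm-host affinity"
    VM_HOST_ANTI_AFFINITY = "vm-host anti-affinity"


@dataclass(frozen=True)
class Rule:
    kind: Kind
    vms: frozenset
    hosts: frozenset = frozenset()  # only used by the VM-Host rule types
    must: bool = False              # True = MUST, False = SHOULD (assumed semantics)


def is_satisfied(rule, placement):
    """Check one rule against a placement (mapping of VM name -> host name)."""
    used = [placement[vm] for vm in rule.vms if vm in placement]
    if rule.kind is Kind.VM_VM_AFFINITY:
        return len(set(used)) <= 1          # all VMs share one host
    if rule.kind is Kind.VM_VM_ANTI_AFFINITY:
        return len(set(used)) == len(used)  # no two VMs share a host
    if rule.kind is Kind.VM_HOST_AFFINITY:
        return all(h in rule.hosts for h in used)
    return all(h not in rule.hosts for h in used)  # VM-Host anti-affinity


def evaluate(rules, placement):
    """Return (allowed, soft_violations) for a proposed placement."""
    broken = [r for r in rules if not is_satisfied(r, placement)]
    allowed = not any(r.must for r in broken)
    return allowed, [r for r in broken if not r.must]


rules = [
    Rule(Kind.VM_VM_ANTI_AFFINITY, frozenset({"sql-1", "sql-2"}), must=True),
    Rule(Kind.VM_HOST_AFFINITY, frozenset({"web-1"}), frozenset({"host-a", "host-b"})),
]
placement = {"sql-1": "host-a", "sql-2": "host-b", "web-1": "host-c"}
allowed, soft = evaluate(rules, placement)
```

In this example the placement is allowed because the MUST anti-affinity rule holds, while the SHOULD rule for `web-1` is returned as a soft violation that a scheduler could try to fix later.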

In addition to matching the capabilities of vSphere DRS, the Acropolis X-Fit functionality is also tightly integrated with both the compute and storage layers and works to proactively identify and resolve storage and compute contention by automatically moving virtual machines while ensuring data locality is optimised.

There are many other exciting load balancing capabilities to come, so stay tuned, as the AHV scheduler has plenty more tricks up its sleeve.

Related .NEXT 2016 Posts

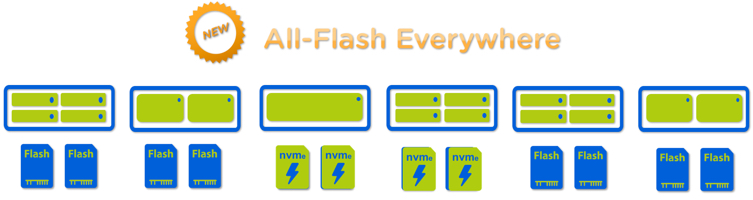

- What’s .NEXT 2016 – All Flash Everywhere!

- What’s .NEXT 2016 – Acropolis File Services

- What’s .NEXT 2016 – Acropolis X-Fit

- What’s .NEXT 2016 – Any node can be storage only

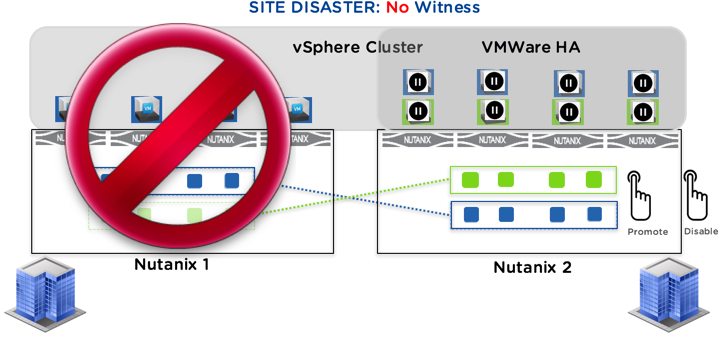

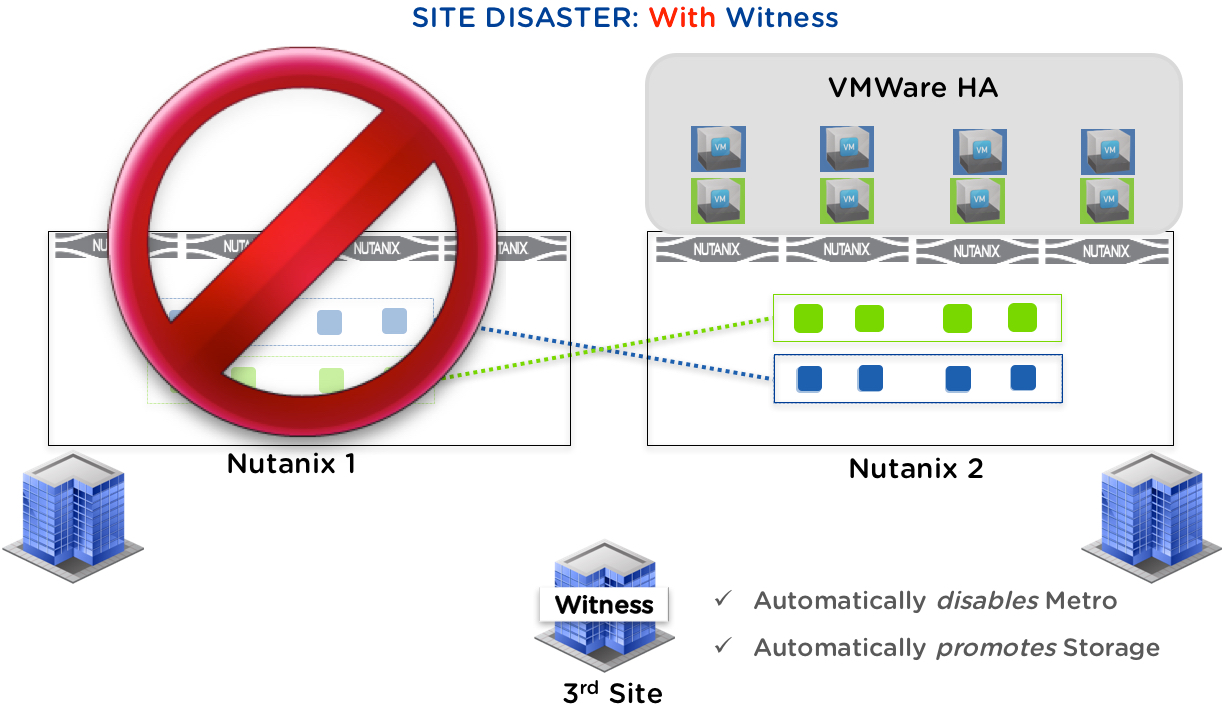

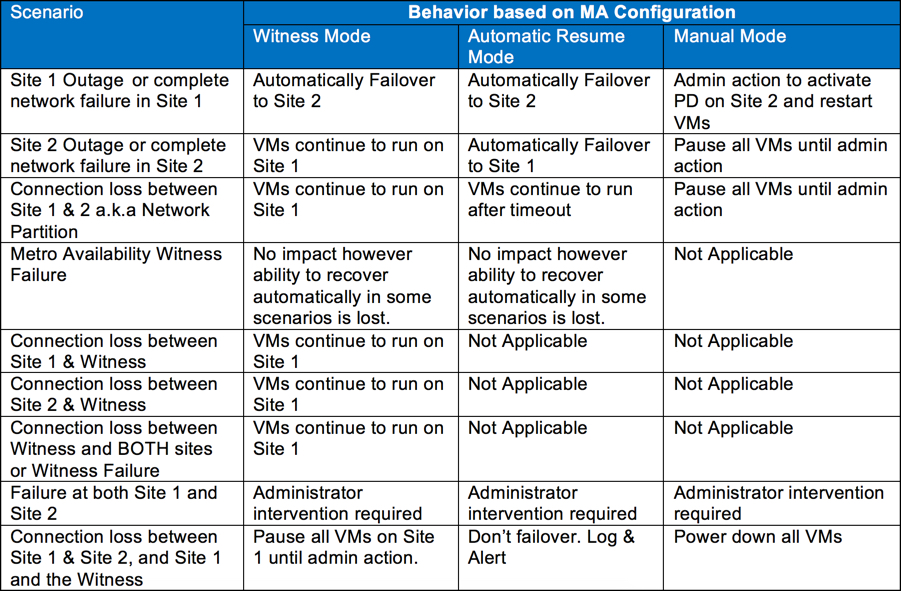

- What’s .NEXT 2016 – Metro Availability Witness

- What’s .NEXT 2016 – PRISM integrated Network configuration for AHV

- What’s .NEXT 2016 – Enhanced & Adaptive Compression

- What’s .NEXT 2016 – Acropolis Block Services

- What’s .NEXT 2016 – Self Service Restore