This is the first of a series of posts covering how the Integrity of I/O is ensured for Virtual Machines when writing to VMDK/s (Virtual SCSI Hard Drives) running on NFS datastores presented via VMware’s ESXi hypervisor as a “Datastore”.

Note: To be crystal clear, this post is not talking about presenting NFS direct to Windows or any other guest operating system.

This process is patented (US7865663) by VMware and its inventors and on the patent the process is called “SCSI Protocol Emulation”.

This series will first cover the topics in a vendor agnostic manner, meaning I am talking in general about VMware + any NFS storage on the VMware HCL with NFS support.

Following the vendor agnostic posts, I will follow with a series of posts focusing specifically on Nutanix, as the motivation for the series was to cover off this topic for existing or potential Nutanix customers, some of whom are less familiar with NFS and have asked for clarification, especially around virtualizing Business Critical Applications (vBCA) such as Microsoft SQL and Exchange.

The below diagram visualizes shows how storage can be presented to an ESXi host and what this series will focus on.

A VM accesses its .vmx and .vmdk file/s via a datastore the same way, regardless of the underlying storage protocol (DAS SCSI, iSCSI , NFS , FCP).

In the case of NFS datastores, SCSI protocol emulation is used to allow the Guest Operating System (OS) and application/s to read and write via SCSI even when the underlying storage (which is abstracted by the hypervisor) is served via NFS which does not natively support the same commands.

In the following section, and throughout this series, many images shown are from the patent (US7865663) and are the property of the patent owners, not the author of this article.

The areas which I will be focusing on are the ones where there has been the most concern in the industry, especially for business critical applications, such as Microsoft SQL and Microsoft Exchange, being how are the VM operating system and application/s (or data integrity) are impacted when issuing commands when the storage is abstracted by the hypervisor and served to via NFS which does not have equivalent I/O commands as SCSI.

Some examples areas of concern around the industry for VMs running on datastores backed by NFS are:

1. SCSI Aborts / Resets

2. Forced Unit Access (FUA) & Write Through

3. Write Ordering

4. Torn I/O (Writes + Reads)

In this first part, we will look at the SCSI Protocol Emulation process and discuss SCSI Aborts and Resets and how the SCSI protocol emulation process deals with these.

Below is a diagram showing the flow of an I/O request for a VM writing SCSI commands to a VMDK (formatted as NTFS) through the SCSI emulation process and through to the NFS storage.

The first few steps in my opinion are fairly self explanatory, where it gets interesting for me, and one of the points of contention among I.T professional (being SCSI aborts) is described in the box labelled “550“.

If the SCSI command is an abort (which has no equivalent in the NFS protocol), the SCSI emulation process removes the corresponding request from the virtual SCSI request list created in the previous step (box labelled “540“).

The same is true if the SCSI command is a reset (which also has no equivalent in the NFS protocol), the SCSI emulation process removes the corresponding request from the virtual SCSI request list. This process is shown below in the box labelled “560”

Next lets look at what happens if the SCSI “abort” or “reset” command is issued after the SCSI emulation process has passed on the command to the storage and is now receiving a reply to a command which the Guest OS / Application has aborted?

Its quite simple, the SCSI emulation process receives a reply from the NFS server, looks up the corresponding tag in the Virtual SCSI request list, and because this corresponding tag does not exist, the emulator drops the reply therefore emulating a SCSI abort command.

The process is shown below from box labelled “710” to “720” and finishing at “730“.

In the patent, the above process is summed up nicely in the following paragraph.

Accordingly, a faithful emulation of SCSI aborts and resets, where the guest OS has total control over which commands are aborted and retried can be achieved by keeping a virtual SCSI request list of outstanding requests that have been sent to the NFS server. When the response to a request comes back, an attempt is made to find a matching request in the virtual SCSI request list. If successful, the matching request is removed from the list and the result of the response is returned to the virtual machine. If a matching request is not found in the virtual SCSI request list, the results are thrown away, dropped, ignored or the like.

So there we have it, that is how VMware’s patented SCSI Protocol emulation allows SCSI commands not supported natively by NFS to be honoured, therefore allowing applications dependant on Block based storage to be ran successfully within a VM where its VMDK is backed by NFS storage.

Let’s recap what we have learned so far.

1. The SCSI Commands, abort & reset have no equivalent in the NFS protocol.

2. The VMware SCSI Emulation process handles SCSI commands not supported natively by NFS thanks to the Virtual SCSI Request List.

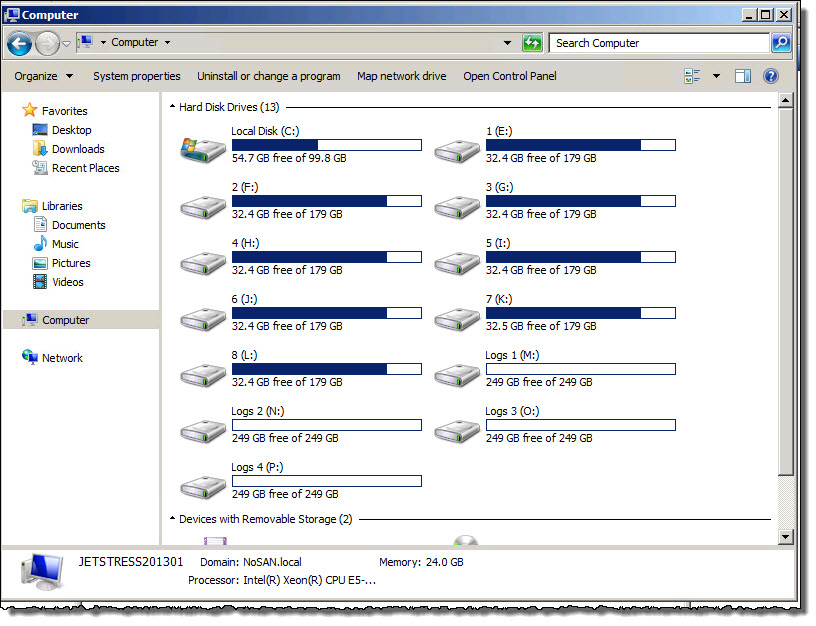

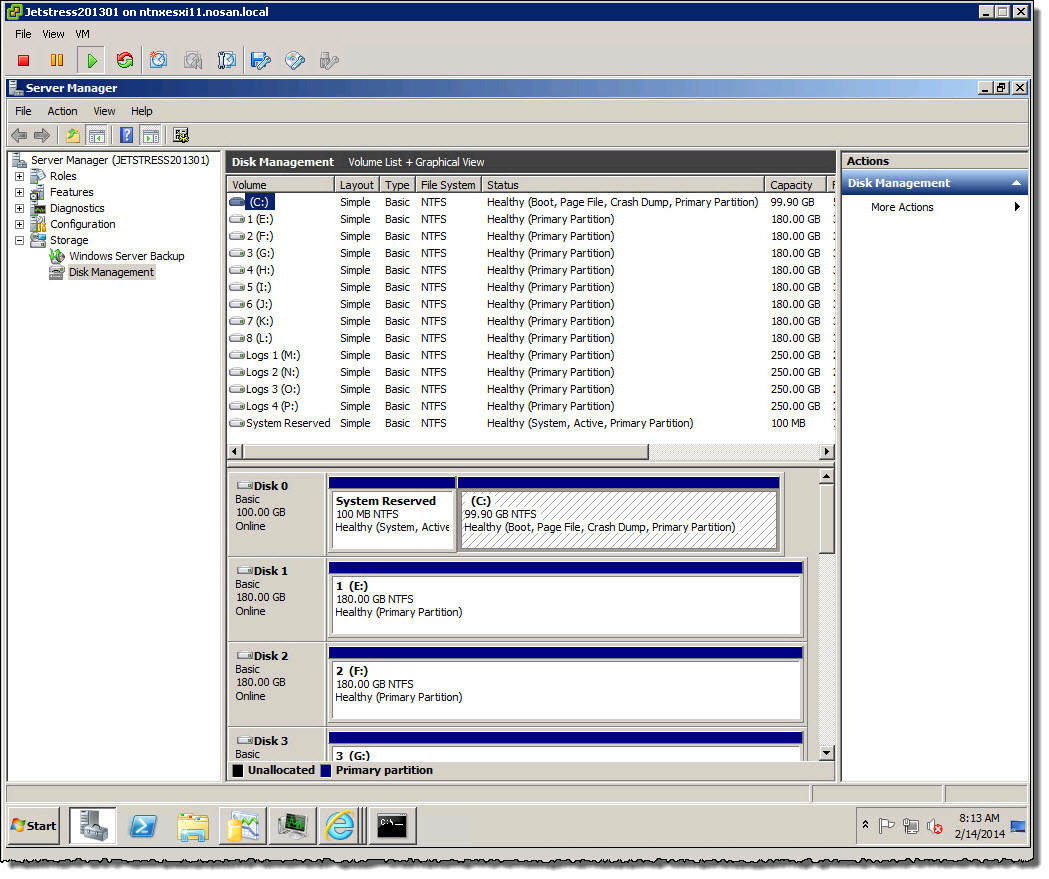

3. Guest Operating Systems and Applications running in Virtual Machines on ESXi issue native SCSI commands to the NTFS volume, which is presented to the VM via a VMDK and housed on an NFS datastore.

4. The underlying NFS protocol is not exposed to the Guest OS, Application/s or Virtual Machine.

5. The SCSI Commands, abort & reset are emulated by the hyper visor through removing these requests from the Virtual SCSI emulation list.

In part two, I will discuss Forced Unit Access (FUA) & Write Through.

Integrity of Write I/O for VMs on NFS Datastores Series

Part 1 – Emulation of the SCSI Protocol

Part 2 – Forced Unit Access (FUA) & Write Through

Part 3 – Write Ordering

Part 4 – Torn Writes

Part 5 – Data Corruption

Nutanix Specific Articles

Part 6 – Emulation of the SCSI Protocol (Coming soon)

Part 7 – Forced Unit Access (FUA) & Write Through (Coming soon)

Part 8 – Write Ordering (Coming soon)

Part 9 – Torn I/O Protection (Coming soon)

Part 10 – Data Corruption (Coming soon)

Related Articles

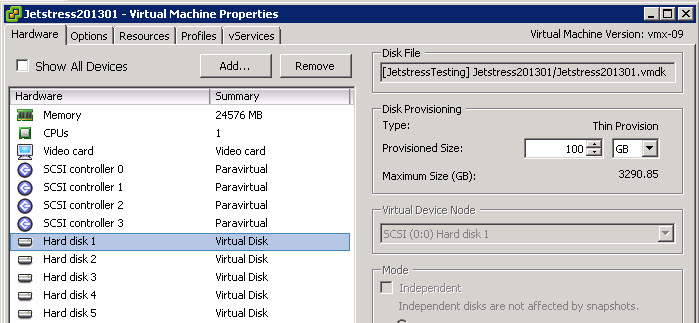

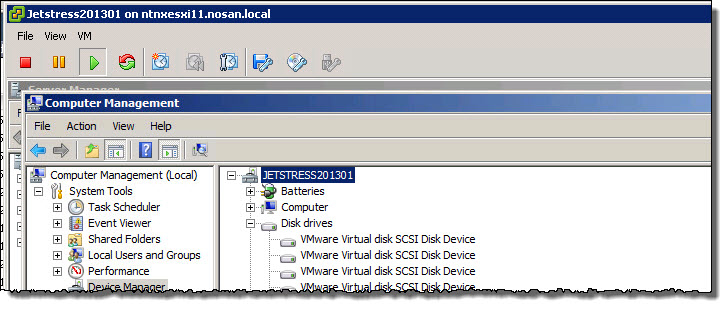

1. What does Exchange running in a VMDK on NFS datastore look like to the Guest OS?

2. Support for Exchange Databases running within VMDKs on NFS datastores (TechNet)

3. Microsoft Exchange Improvements Suggestions Forum – Exchange on NFS/SMB

4. Virtualizing Exchange on vSphere with NFS backed storage?