Even before I achieved my VCDX in May 2012, I had been helping VCDX candidates by doing design reviews and more importantly conducting mock panels.

Over the last couple of years, I estimate I have been involved with at least 15 candidates, ranging from a single mock panel and advice over WebEx to mentoring candidates through their entire journey.

I have easily conducted 30 or more mock panels, and from that experience I have put together the most common mistakes candidates make, along with my tips for the defence.

Note: I am not an official panellist and I do not know how the scoring works. The advice below is based on conducting mock panels, the success rate of the candidates I have conducted mock panels with, and my own experience of achieving VCDX on the first attempt.

Common Mistakes

1. Using a Fictitious Design

In every case where I have done a mock panel for a candidate using a fictitious design, even when I did not know it was fictitious, it became very obvious very quickly.

It is obvious to a mock panellist because of the lack of depth the candidate can go into about the solution, for example its requirements.

In my experience, candidates using fictitious designs generally take multiple attempts before successfully defending.

This may sound harsh, but if you need to use a fictitious design, you probably don’t have enough architecture experience for VCDX. Otherwise you would be able to choose from numerous real designs to submit, rather than creating a fictitious one.

Some candidates use fictitious designs for privacy or NDA reasons. In that case, you should be able to remove the customer-specific details and defend a real design, which is what I would strongly recommend.

Note: For those VCDXs who have passed with a fictitious design, I am not in any way taking away from their achievement. If anything, they had more of a challenge than people like me who used a real design.

2. Giving an answer of “I was not responsible for that portion of the design”.

If you give this answer, you are demonstrating that you do not have an expert-level understanding of the solution as a whole. That translates to risk/s, as the part of the design you were responsible for may not be compatible with the component/s you were not involved in.

A VCDX candidate may not always be the lead architect on a project, but a VCDX level candidate will always ensure he/she has a thorough understanding of the total solution and will ask the right questions of other architects involved with the project to gain at least a solid level of understanding of all parts of the solution.

With this understanding of other components of the total solution, a candidate should be able to discuss in detail how each component influenced other areas of the design, and what impact (positive or negative) this had on the solution.

3. Not knowing your design!

This is the one which surprises me the most. If you’re considering submitting for VCDX, or have already submitted and been accepted, you should already know your design back to front, including the areas which you may not have been responsible for.

You should not be dependent on the PowerPoint presentation used in your defence, as this is really for the benefit of the panellists, not for you to read word for word.

Think about the VCDX panel this way: you (should) know more about your design than the panel does, for the simple reason that it’s your design.

So you have an advantage over the panellists – ensure you maximize this advantage by knowing your design back to front.

If you cannot comfortably talk about your design for 75 minutes without referring to reference material, you should probably review your design until you can.

4. Not having clear and concise answers of varying depths for the panellists’ questions

I hear you all saying, how the hell do I know what the panel will ask me? As a VCDX candidate preparing to defend, you’re basically saying, I am an Expert in virtualization and I want to come and have my expertise validated.

As an Expert (not a Professional or Specialist, but an EXPERT) you should be able to go through your design and, with your reviewer’s hat on, write down literally 50+ questions that you would ask if you were reviewing this document for somebody else, or indeed acting as a real or mock panellist.

In my experience, I predicted approximately 80% of the questions the panel ended up asking me, which made my defence a much less stressful experience than it might otherwise have been.

Once you have written down these questions (seriously, 50+), you should ask yourself those questions and ensure you have answers to them. The answers you have, or prepare, should come in three levels:

a) 1st Level – 30 seconds or less which cover the key points at a high level

b) 2nd Level – A further 30 – 60 seconds which expands on (and does not repeat) the 1st level statements

c) 3rd Level – A further 30-60 seconds which is very detailed and shows your deep understanding of the topic.

I would suggest that if you don’t have solid 1st and 2nd Level answers to the questions, you’re probably not ready for VCDX. As for the 3rd Level answers, in some areas you should be strong enough to go to this depth and in other areas you may not be, but you should prepare regardless and focus on your weak areas.

5. Giving BS answers

I can’t put this any nicer: if you think you can get away with giving a BS answer to the VCDX panel, or even to a good mock panellist, you’re sorely mistaken.

It never ceases to amaze me that people seem to refuse to admit when they don’t know something. Nobody knows everything, so don’t be afraid to say, “I don’t know”.

Don’t waste the precious time that you have to demonstrate your expertise by giving BS answers that will do nothing to help your chances of passing.

In a mock panel situation, take note of any questions you’re asked which you don’t know, or don’t have strong answers to. Then review your design, do some research and ensure you understand the topic in detail and can speak about it.

This may result in you finding a weakness in your design. Even if your design has already been accepted, you have the chance to highlight these weaknesses in your defence and discuss the implications and what you would/could do differently. This is a great way to demonstrate expertise.

6. Not knowing about Alternatives to your design

If you work for Vendor X, and Vendor X has a pre-packaged converged solution with a cookie-cutter reference architecture which you customize for each client, you could have been successfully deploying solutions for years and be an expert in that solution. This alone doesn’t make you a VCDX; in fact, it could mean quite the opposite.

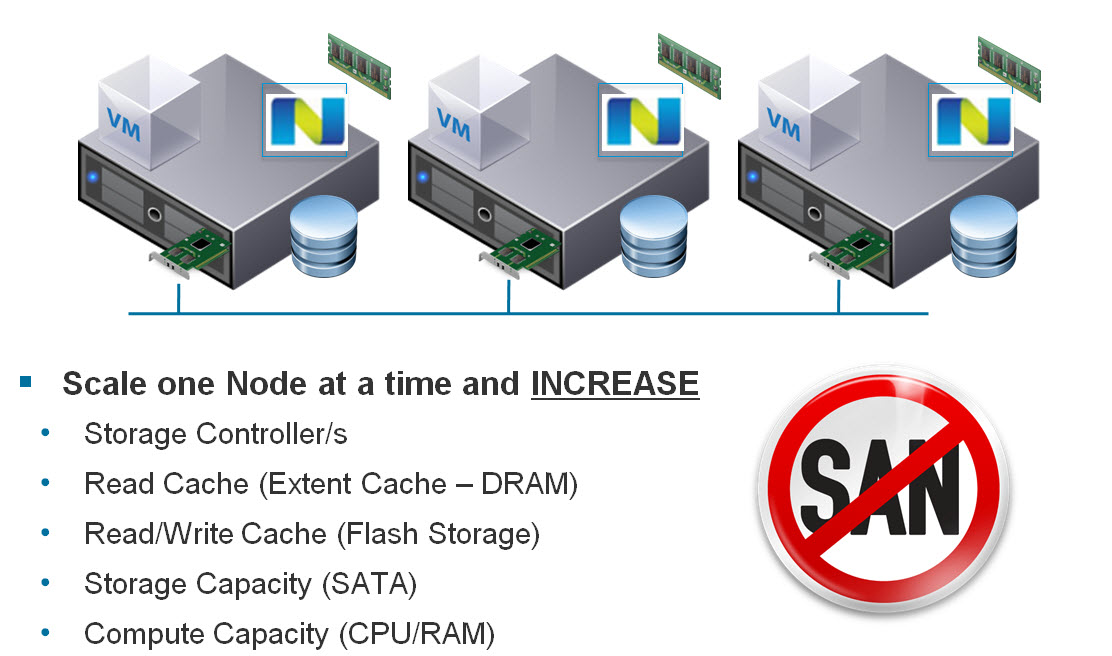

If your solution is a vBlock, with Cisco UCS, EMC storage (FC/FCoE) and vSphere, how would your solution be impacted if the customer said at the last minute, “we want to use NetApp storage and NFS”? What if the customer dropped EMC/NetApp and went for Nutanix? How would the solution change, what are the pros and cons, and how would this impact your vSphere design choices?

If you can’t talk to this in detail, for example the pros and cons of:

a) Block vs File based storage

b) Blade vs Rack mount

c) Enterprise Plus versus Standard Edition for your environment

d) Isolation response for Block versus IP storage

Then you’re not a Design Expert; you’re at best a Vendor X solution specialist.

If you do use a FlexPod, vBlock or Nutanix type solution with a reference architecture (RA) or best practices, you should know the reasons behind every decision in the reference architecture as if you were the person who wrote the document, not just customized it.

Tips for the defence

1. Answer questions before they are asked

Expanding on Common Mistake #4, as previously mentioned, you should be able to work out the vast majority of questions the panel will ask you, by reviewing your design and having others also review your work.

With this information in mind, as you present your architecture, use statements such as

“I used the following configuration for reasons X,Y,Z as doing so mitigated risks 1,2,3 and met the requirements R01,R02”.

This technique allows you to demonstrate expertise by showing you understand why you made a decision, the risks you mitigated and the requirements you met, without being asked a single question.

So what are the advantages of doing this?

a) You demonstrate expertise while saving time, therefore maximizing your chance of a passing mark

b) You can prepare these statements, and potentially avoid being interrupted and losing your train of thought.

2. When asked a question, don’t be too long winded.

As mentioned in Common Mistake #4, preparing short, concise answers is critical. Don’t give a 5 minute answer to a question, as this is likely to waste time. Give your Level 1 answer, which should cover the key concepts and decision points in around 30 seconds, and if the panel drills down further, give your Level 2 answer, which expands on the Level 1 answer, and so on.

This means you can maximize your time to maximize your score in other areas. If the panel is not satisfied with your answer, they will ask it again, time permitting.

Which leads us nicely onto the next item:

3. If a question is asked twice, go straight to Level 2 answer

If the panel has asked you a question, you gave the Level 1 answer, and later on you’re asked the same question again, it’s possible you gave an unclear or incorrect answer the first time, so now is your chance to correct a mistake or improve your score.

Think about the answer you gave previously. If you made a mistake for any reason, call it out by saying something like, “Earlier I mistakenly said X, however the fact/s are….”

This will show the panel you know you made a mistake, and you do in fact know the correct answer or the topic in question.

4. Near the end of your Defence give more detailed answers

In the first half of the 75 minutes, giving your Level 1 and maybe Level 2 answers allows you to save time and maximize your score across all areas.

As you get past the halfway mark and near the 20 minutes remaining mark, you should have gone over most areas of your design, and now is the chance to maximize your score.

When asked questions at this stage, I would suggest the Level 2/3 answers are what you should be giving. Where you may have given a good Level 1 answer, now is the chance to move from a good answer, to a great answer and maximize your score.

Summary

I hope the above tips help you prepare for the VCDX defence and best of luck with your VCDX journey. For those who are interested, you can read about My VCDX Journey.

In Part 2, I will go through preparing for the Design Scenario, and how to maximize your 30 minutes.