Back in 2014, I wrote about Hardware support contracts & why 24×7 4 hour onsite should no longer be required. For those of you who haven’t read the article, I recommend doing so prior to reading this post.

In short, the post argued that the old-school requirement for expensive 24/7, 2 or 4-hour maintenance contracts becomes all but redundant when IT solutions are designed with appropriate levels of resiliency and have self-healing capabilities that meet the business continuity requirements.

Some of the key points I made regarding hardware maintenance contracts included:

a) Vendors failing to meet SLA for onsite support.

b) Vendors failing to have the required parts available within the SLA.

c) Replacement HW being refurbished (common practice) and being faulty.

d) The more proprietary the HW, the more likely replacement parts will not be available in a timely manner.

All of these are applicable to all vendors and can significantly impact the ability to get the IT infrastructure back online or back to a resilient state where subsequent failures may be tolerated without downtime or data loss.

With the current Coronavirus pandemic, I thought it important to revisit this topic and see what we can do to improve the resiliency of our critical IT infrastructure and ensure business continuity no matter what the situation.

Let’s start with “Vendors failing to meet SLA for onsite support.”

At the time of writing, companies the world over are asking employees to work from home and operate on skeleton staff. This will no doubt impact vendor abilities to provide their typical levels of support.

Governments are also encouraging social distancing, asking people to isolate themselves and avoid unnecessary travel.

We would be foolish to assume this won’t impact vendor abilities to provide support, especially hardware support.

What about Vendors failing to have the required parts available within the SLA?

I’m currently seeing significantly fewer flights operating, e.g. from the USA to Europe, which will no doubt delay parts shipments and make it harder to meet the target service level agreements.

Regarding vendors using potentially faulty refurbished (common practice) hardware, this risk in itself isn’t increased, but if it does occur, the shipment of alternative/new parts is likely to be delayed.

Lastly, infrastructure leveraging proprietary HW makes it more likely that replacement parts will not be available in a timely manner.

What are some of the options Enterprise Architects can offer their customers/employers when it comes to delivering highly resilient infrastructure to meet/exceed business continuity requirements?

Let’s start with the assumption that replacement hardware isn’t available for one week, which is likely much more realistic than same-day replacement for the majority of customers considering the current pandemic.

Business Continuity Requirement #1: Infrastructure must be able to tolerate at least one component failure and have the ability to self heal back to a resilient state where a subsequent failure can be tolerated.

By component failure, I’m talking about things like:

a) HDD/SSDs

b) Physical server/node

c) Networking device such as a switch

d) Storage controller (SAN/NAS controllers, or in the case of HCI, a node)

HDDs/SSDs have been traditionally protected by using RAID and Hot Spares, although this is becoming less common due to RAID’s inherent limitations and high impact of failure.

For physical servers/nodes, products like VMware vSphere, Microsoft Hyper-V and Nutanix AHV all have “High Availability” functions which allow virtual machines to recover onto other physical servers in a cluster in the event of a physical server failure.

For networking, typically leaf/spine topologies provide a sufficient level of protection with a minimum of dual connections to all devices. Depending on the criticality of the environment, quad connections may be considered/required.

Lastly, with Storage Controllers, traditional dual controller SAN/NAS have a serious constraint when it comes to resiliency in that they require the HW replacement to restore resiliency. This is one reason why Hyper-Converged Infrastructure (a.k.a. HCI) has become so popular: some HCI products have the ability to tolerate multiple storage controller failures and continue to function and self-heal thanks to their distributed/clustered architecture.

So with these things in mind, how do we meet our Business Continuity Requirement?

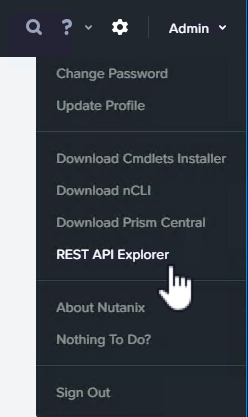

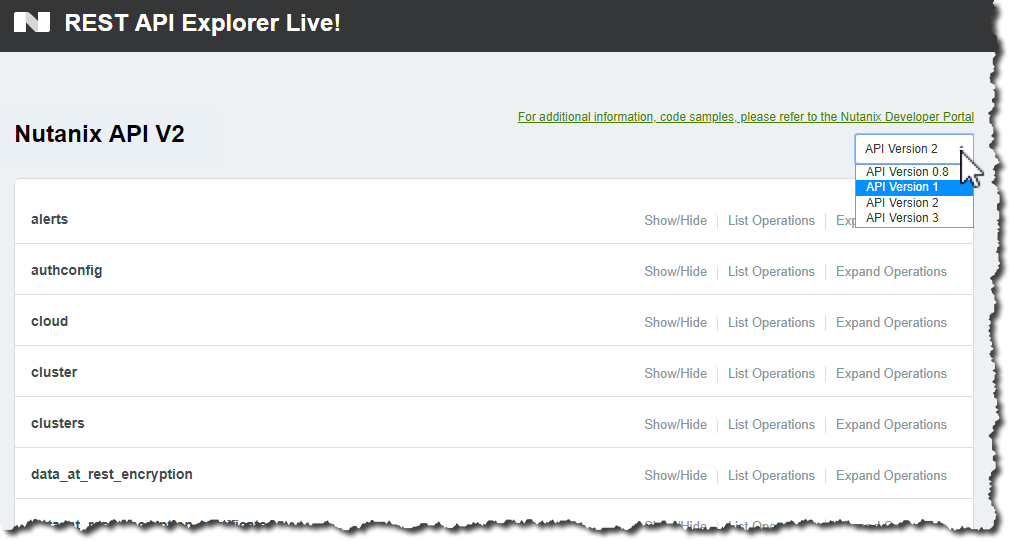

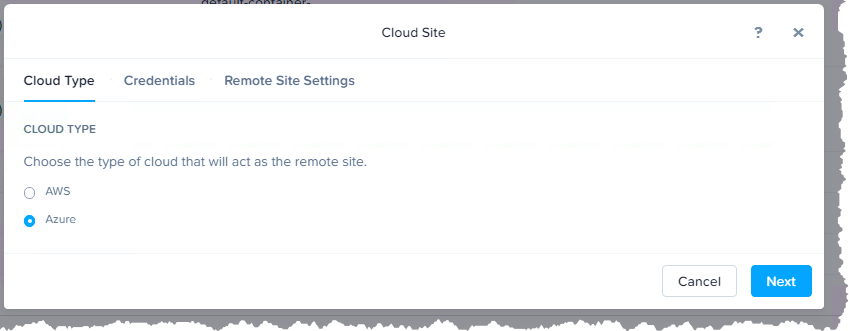

Disclaimer: I work for Nutanix, a company that provides Hyper-Converged Infrastructure (HCI), so I’ll be using this technology as my example of how resilient infrastructure can be designed. With that said the article and the key points I highlight are conceptual and can be applied to any environment regardless of vendor.

For example, Nutanix uses a Scale Out Shared Nothing Architecture to deliver highly resilient and self healing capabilities. In this example, Nutanix has a small cluster of just 5 nodes. The post shows the environment suffering a physical server failure, and then self healing both the CPU/RAM and Storage layers back to a fully resilient state and then tolerating a further physical server failure.

After the second physical server failure, it’s critical to note the Nutanix environment has self healed back to a fully resilient state and has the ability to tolerate another physical server failure.

In fact the environment has lost 40% of its infrastructure and Nutanix still maintains data integrity & resiliency. If a third physical server failed, the environment would continue to function maintaining data integrity, though it may not be able to tolerate a subsequent disk failure without data becoming unavailable.

So in this simple example of a small 5-node Nutanix environment, up to 60% of the physical servers can be lost and the business would continue to function.
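The capacity side of this reasoning can be sketched in a few lines of Python. This is a deliberately simplified model and not how Nutanix (or any vendor) actually decides whether it can rebuild: it assumes two copies of all data, identical nodes, and it ignores CPU/RAM, metadata and quorum requirements. The class, function names and all capacity figures are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, replace


@dataclass
class Cluster:
    nodes: int                # healthy nodes currently in the cluster
    node_capacity_tb: float   # raw capacity per node (hypothetical figure)
    logical_data_tb: float    # data stored, before replication
    replicas: int = 2         # ASSUMPTION: two copies of every piece of data

    def raw_needed_tb(self) -> float:
        """Raw capacity needed to hold every replica of the data."""
        return self.logical_data_tb * self.replicas

    def can_self_heal_after_failure(self) -> bool:
        """True if, after losing one node, the survivors can hold all replicas."""
        survivors = self.nodes - 1
        if survivors < self.replicas:
            # Not enough nodes left to keep each copy on a different node.
            return False
        return survivors * self.node_capacity_tb >= self.raw_needed_tb()


def tolerated_sequential_failures(cluster: Cluster) -> int:
    """Count node failures that can each be followed by a full self-heal."""
    current = replace(cluster)  # work on a copy, leave the input untouched
    count = 0
    while current.can_self_heal_after_failure():
        current.nodes -= 1
        count += 1
    return count


# Hypothetical 5-node cluster with plenty of free capacity.
lightly_used = Cluster(nodes=5, node_capacity_tb=10.0, logical_data_tb=12.0)
# The same cluster, but much fuller.
heavily_used = Cluster(nodes=5, node_capacity_tb=10.0, logical_data_tb=18.0)

print(tolerated_sequential_failures(lightly_used))  # 2
print(tolerated_sequential_failures(heavily_used))  # 1
```

The point of the sketch is that the number of failures an environment can self heal from depends on how much free capacity was designed in, which is why sizing for failures needs to happen before the hardware is purchased.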

With all these component failures, it’s important to note the Nutanix platform self healing was completed without any human intervention.

For those who want more technical detail, check out my post which shows Nutanix Node (server) failure rebuild performance.

From a business perspective, a Nutanix environment can be designed so that the infrastructure can self heal from a node failure in minutes, not hours or days. The platform’s ability to self heal in a timely manner is critical to reduce the risk of a subsequent failure causing downtime or data loss.
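To put rough numbers on why the length of the exposure window matters, here is a minimal sketch that compares waiting a week for replacement hardware with self healing in 30 minutes. It assumes node failures are independent and occur at a constant rate, which is a simplification. The 5% annual failure rate, the four remaining nodes and the 30-minute heal time are all hypothetical inputs, not measured values.

```python
import math


def prob_additional_failure(remaining_nodes: int,
                            annual_failure_rate: float,
                            window_hours: float) -> float:
    """Probability that at least one remaining node fails within the window.

    ASSUMPTION: independent failures at a constant rate (exponential model).
    """
    hours_per_year = 365 * 24
    # Convert the annual failure probability into an hourly failure rate.
    rate_per_hour = -math.log(1.0 - annual_failure_rate) / hours_per_year
    return 1.0 - math.exp(-rate_per_hour * remaining_nodes * window_hours)


# Hypothetical inputs: 4 surviving nodes, 5% annual failure rate per node.
one_week = prob_additional_failure(4, 0.05, window_hours=7 * 24)
thirty_minutes = prob_additional_failure(4, 0.05, window_hours=0.5)

print(f"Exposed for one week:   {one_week:.4%}")
print(f"Exposed for 30 minutes: {thirty_minutes:.4%}")
print(f"Relative risk:          {one_week / thirty_minutes:.0f}x")
```

Under this model the risk of a second failure scales almost linearly with the time spent in a non-resilient state, so a window that is a few hundred times longer carries a few hundred times the risk, whatever the underlying failure rate turns out to be.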

Key Point: The ability for infrastructure to self heal back to a fully resilient state following one or more failures WITHOUT human intervention or hardware replacement should be a firm requirement for any new or upgraded infrastructure.

So the good news for Nutanix customers is that during this pandemic or future events, assuming the infrastructure has been designed to tolerate one or more failures and self heal, the potential (if not likely) delay in hardware replacements is unlikely to impact business continuity.

For those of you who are concerned after reading this that your infrastructure may not provide the business continuity you require, I recommend you get in touch with the vendor/s who supplied the infrastructure and document the failure scenarios, the impact each has on the environment, and how the solution is recovered back to a fully resilient state.

Worst case, you’ll identify gaps which will need attention, but think of this as a good thing because this process may identify issues which you can proactively resolve.

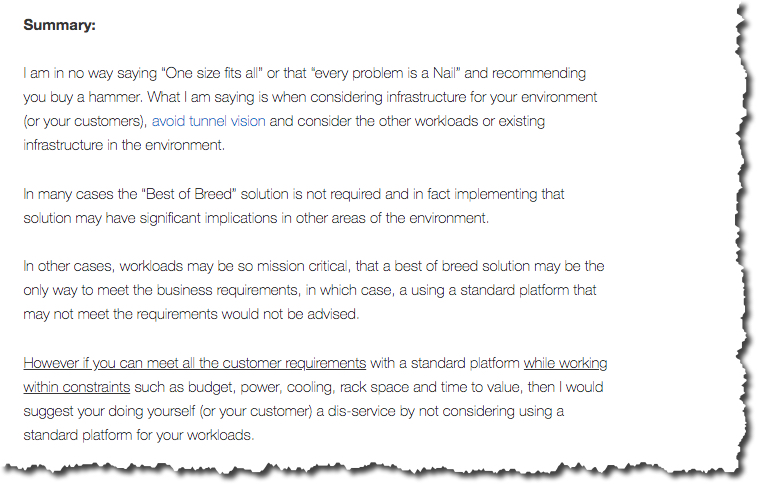

Pro Tip: Where possible, choose a standard platform for all workloads.

As discussed in “Things to consider when choosing infrastructure”, choosing a standard platform to support all workloads can have major advantages such as:

- Reduced silos

- Increased infrastructure utilisation (due to reduced fragmentation of resources)

- Reduced operational risk/complexity (due to fewer components)

- Reduced OPEX

- Reduced CAPEX

The article summarises by stating:

“if you can meet all the customer requirements with a standard platform while working within constraints such as budget, power, cooling, rack space and time to value, then I would suggest you’re doing yourself (or your customer) a dis-service by not considering using a standard platform for your workloads.”

What are some of the key factors to improve business continuity?

- Keep it simple (stupid!) and avoid silos of bespoke infrastructure where possible.

- Design BEFORE purchasing hardware.

- Document BUSINESS requirements AND technical requirements.

- Map the technical solution back to the business requirements i.e.: How does each design decision help achieve the business objective/s.

- Document risks and how the solution mitigates & responds to the risks.

- Perform operational verification i.e.: Validate the solution works as designed/assumed & perform this testing after initial implementation & maintenance/change windows.

Considerations for CIOs / IT Management:

- Cost of performance degradation, such as reduced sales transactions/minute and/or employee productivity/morale

- Cost of downtime, such as a total outage of IT systems, including lost revenue & impact to your brand

- Cost of increased resiliency compared to the two costs above, i.e. it’s often much cheaper to implement a more resilient solution than suffer even a single outage annually

- How employees can work from home and continue to be productive
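The cost comparison in the list above can be expressed as simple arithmetic. The sketch below uses entirely hypothetical figures (one 8-hour outage per year, hourly revenue and productivity losses, and an annual cost for the extra resiliency); substitute your own numbers, and note that harder-to-quantify costs such as impact to your brand are not modelled.

```python
def expected_annual_outage_cost(outages_per_year: float,
                                hours_per_outage: float,
                                revenue_loss_per_hour: float,
                                productivity_loss_per_hour: float) -> float:
    """Expected yearly cost of outages, excluding brand impact."""
    hourly_loss = revenue_loss_per_hour + productivity_loss_per_hour
    return outages_per_year * hours_per_outage * hourly_loss


# All figures below are hypothetical and for illustration only.
outage_cost = expected_annual_outage_cost(
    outages_per_year=1,
    hours_per_outage=8,
    revenue_loss_per_hour=20_000,
    productivity_loss_per_hour=5_000,
)
extra_resiliency_cost = 60_000  # assumed annual cost of the more resilient design

net_benefit = outage_cost - extra_resiliency_cost
print(f"Expected outage cost per year: {outage_cost:,.0f}")
print(f"Extra resiliency per year:     {extra_resiliency_cost:,.0f}")
print(f"Net benefit of resiliency:     {net_benefit:,.0f}")
```

With these example inputs a single outage costs 200,000 against 60,000 for the more resilient design, which is the kind of comparison worth putting in front of management before the design is finalised.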

Here are a few things to ask of your architect/s when designing infrastructure:

- Document failure scenarios and the impact to the infrastructure.

- Document how the environment can be upgraded to provide higher levels of resiliency.

- Document the Recovery Time (RTO) and Recovery Point Objectives (RPO) and how the environment meets/exceeds these.

- Document under what circumstances the environment may/will NOT meet the desired RPO/RTOs.

- Design & Document a “Scalable and repeatable model” which allows the environment to be scaled without major re-design or infrastructure replacement to cater for unforeseen workload (e.g. a sudden increase in employees working from home).

- Avoid creating unnecessary silos of dissimilar infrastructure
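One way to keep the documented failure scenarios honest is to record them as data and check them against the agreed RTO/RPO. The following is a minimal sketch of that idea; the `FailureScenario` structure, the `find_gaps` helper, the scenario names and every number in it are hypothetical examples, not figures for any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureScenario:
    name: str
    expected_recovery_minutes: float    # time until service is restored
    expected_data_loss_minutes: float   # worst-case window of lost data
    self_heals_without_hardware: bool   # back to resilient with no replacement parts?


def find_gaps(scenarios, rto_minutes: float, rpo_minutes: float):
    """Return a human-readable finding for every unmet requirement."""
    findings = []
    for s in scenarios:
        if s.expected_recovery_minutes > rto_minutes:
            findings.append(f"{s.name}: recovery exceeds RTO")
        if s.expected_data_loss_minutes > rpo_minutes:
            findings.append(f"{s.name}: data loss exceeds RPO")
        if not s.self_heals_without_hardware:
            findings.append(f"{s.name}: resiliency depends on replacement hardware")
    return findings


# Hypothetical scenarios and objectives, for illustration only.
scenarios = [
    FailureScenario("Single node failure", 5, 0, True),
    FailureScenario("Storage controller failure (dual-controller array)", 1, 0, False),
    FailureScenario("Restore from backup after data corruption", 480, 1440, True),
]

for finding in find_gaps(scenarios, rto_minutes=240, rpo_minutes=60):
    print(finding)
```

Each finding is a circumstance under which the environment will not meet the desired RPO/RTO or cannot return to a resilient state on its own, which is exactly what should be documented and discussed before an incident rather than during one.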

Related Articles:

- Scale Out Shared Nothing Architecture Resiliency by Nutanix

- Hardware support contracts & why 24×7 4 hour onsite should no longer be required.

- Nutanix | Scalability, Resiliency & Performance | Index

- Nutanix vs VSAN / VxRAIL Comparison Series

- How to Architect a VSA , Nutanix or VSAN solution for >=N+1 availability.

- Enterprise Architecture and avoiding tunnel vision