While Virtualization of MS Exchange is now common across multiple hypervisors, it continues to be a hotly debated topic. The most common objection is cost (CAPEX), the next is complexity (which translates to CAPEX & OPEX), and the third is that Virtualization adds minimal value because MS Exchange provides application level high availability. The other objection I hear is that Virtualization isn’t supported, which always makes me laugh.

In my experience, these objections are typically raised in the context of a dedicated MS Exchange environment, and in that specific context some of them have some truth. But the question becomes: how many customers run only MS Exchange? In my experience, none.

The customers I see typically run tens, hundreds or even thousands of workloads in their datacenters, so architecting a silo for each application is what actually leads to cost & complexity once we look beyond a single application.

Since most customers have Virtualization and want to remove silos in favour of a standardized platform, MS Exchange is just another Business Critical Application which needs to be considered.

Let’s discuss each of the common objections and how I believe Acropolis + Nutanix XCP addresses these challenges:

Microsoft Support for Virtualization

For some reason, there is a huge amount of FUD regarding Microsoft support for Virtualization (other than Hyper-V). Nutanix + Acropolis is certified under the Microsoft Server Virtualization Validation Program (SVVP) and runs on block storage via the iSCSI protocol, so Nutanix + Acropolis is 100% supported for MS Exchange as well as other workloads like SharePoint & SQL.

Cost (CAPEX)

Unlike other hypervisors and management solutions, Acropolis and Acropolis Hypervisor (AHV) come free with every Nutanix node, which eliminates the licensing cost for the Virtualization layer.

Acropolis management components also do not require the purchase or installation of Tier 1 database platforms. All required management components are built into the distributed platform and scale automatically as clusters are expanded, so not even Windows operating system licenses are required for the management components.

In short, Nutanix + Acropolis gives Exchange deployments all the Virtualization features (below), and the benefits they provide, at no additional cost.

- High Availability & Live Migration

- Hardware abstraction

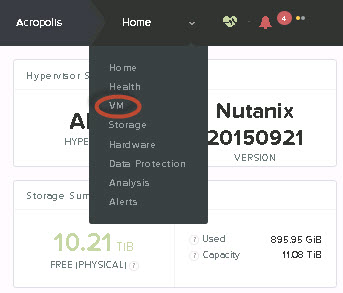

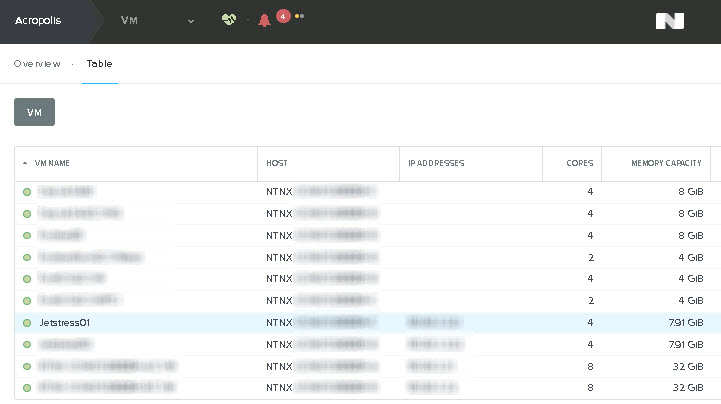

- Performance monitoring

- Centralized management

Complexity (CAPEX & OPEX)

Nutanix XCP + Acropolis can go from out of the box to operational, in a fully optimal configuration, in less than 60 minutes. This includes all required management components, which are automatically deployed as part of the Nutanix Controller VM (CVM). For single-cluster environments, no design/installation is required for any management components, and for multiple-cluster environments, only a single virtual appliance (Prism Central) is required for single pane of glass management across all clusters.

Acropolis gives Exchange deployments all the advantages of Virtualization without:

- Complexity of deploying/maintaining database server/s to support management components

- Deployment of dedicated management clusters to house management workloads

- Having onsite Subject Matter Experts (SMEs) in Virtualization platform/s

Virtualization adds minimal value

While applications such as Exchange have application level high availability, Virtualization can further improve resiliency and flexibility for the application while making better use of infrastructure investments.

The Nutanix XCP including Acropolis + Acropolis Hypervisor (AHV) ensures infrastructure is completely abstracted from the Operating System and Application allowing it to deliver a more highly available and resilient platform.

Microsoft's advice is to limit the maximum compute resources per Exchange server to 24 CPU cores and 96GB RAM. However, with CPU core counts continuing to increase, this may result in larger numbers of servers being purchased and maintained where an application specific silo is deployed. This would lead to increased datacenter and licensing costs, not to mention the operational overhead of managing more infrastructure. As a result, being able to run Exchange alongside other workloads in a mixed environment (where contention can easily be avoided) reduces the total cost of infrastructure while providing higher levels of availability to all workloads.
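To put rough numbers on this, here is a minimal sketch of how much of a modern host would sit idle if a single Exchange server capped at 24 cores and 96GB RAM were its only workload. The host specification (2 sockets x 18 cores, 256GB RAM) and the function name are hypothetical and chosen only for illustration.

```python
# Per-server ceiling quoted in the paragraph above
MAX_EXCHANGE_CORES = 24
MAX_EXCHANGE_RAM_GB = 96


def stranded_resources(host_cores, host_ram_gb):
    """Return (cores, ram_gb) left unused when one Exchange server is the
    only workload on a host and is sized no larger than the ceiling."""
    used_cores = min(host_cores, MAX_EXCHANGE_CORES)
    used_ram_gb = min(host_ram_gb, MAX_EXCHANGE_RAM_GB)
    return host_cores - used_cores, host_ram_gb - used_ram_gb


# Hypothetical host: 2 sockets x 18 cores, 256 GB RAM (an assumption)
idle_cores, idle_ram_gb = stranded_resources(36, 256)
print(f"Idle on a dedicated host: {idle_cores} cores, {idle_ram_gb} GB RAM")
```

On this assumed host, 12 cores and 160GB RAM would be stranded in a dedicated silo. In a mixed virtualized environment that capacity is available to other workloads.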

Virtualization allows Exchange servers to be sized for the current workload and resized quickly and easily if/when required which ensures oversizing is avoided.

Some of the benefits include:

- Minimizing infrastructure in the datacenter

- Increasing utilization and therefore value for money of infrastructure

- Removal of application specific silos

- Ability to upgrade/replace/perform maintenance on hardware with zero impact to application/s

- Faster deployment of new Exchange servers

- Increased availability and higher fault tolerance

- Self-healing capabilities at the infrastructure layer to complement application level high availability

- Ability to increase Compute/Storage resources beyond that of the current underlying physical server (Nutanix node), e.g. adding storage capacity/performance

The Nutanix XCP Advantages (for Exchange)

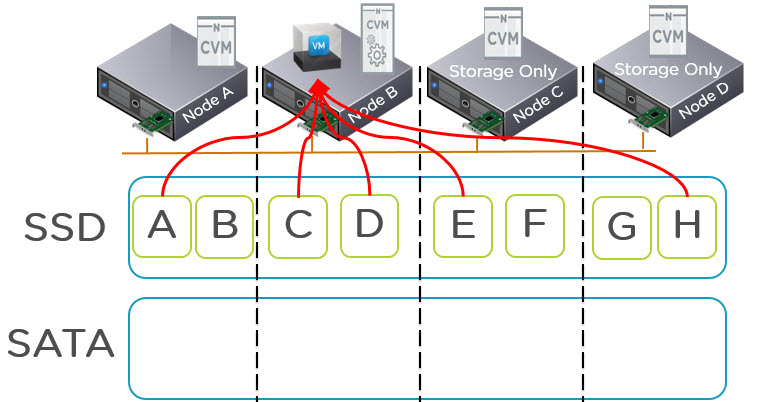

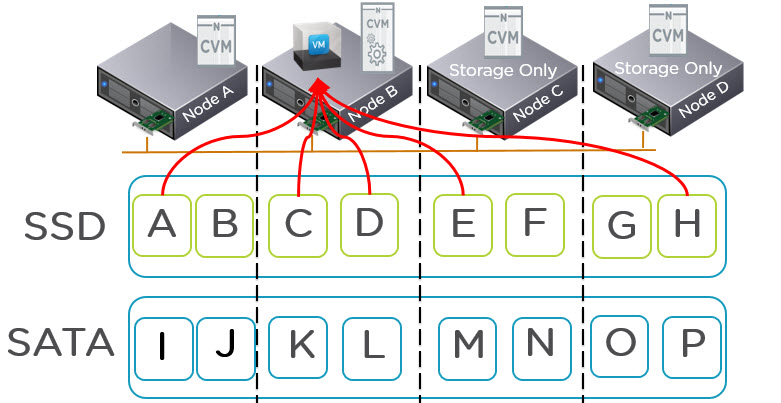

With features such as In-Line compression giving between 1.3:1 and 1.7:1 capacity savings & Erasure Coding providing up to a further 60% usable capacity, Nutanix XCP can provide more usable capacity than RAW while providing protection from SSD/HDD and entire server failures.

In-Line compression also improves performance of the SATA drives, so it's a Win/Win. Erasure coding (EC-X) stores data in a more efficient manner, which allows more data to be served from the SSD tier, also a Win/Win.
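As a rough worked example of how these figures can add up to more than raw capacity, the sketch below assumes data is stored as two copies (replication factor 2) before any savings, treats the "further 60%" from Erasure Coding as a 60% uplift on that usable figure, and applies the quoted compression ratios on top. The replication factor, the way the savings are combined and the 100 TB cluster size are all assumptions made for illustration. Real results depend on the data and the configuration.

```python
def effective_capacity_tb(raw_tb, replication_factor=2, ec_gain=0.60,
                          compression_ratio=1.3):
    """Estimate effective capacity from raw capacity.

    Assumed model (not a vendor formula):
      usable = raw / replication_factor
      usable with erasure coding = usable * (1 + ec_gain)
      effective = usable with erasure coding * compression_ratio
    """
    usable_tb = raw_tb / replication_factor
    usable_with_ec_tb = usable_tb * (1 + ec_gain)
    return usable_with_ec_tb * compression_ratio


RAW_TB = 100.0  # hypothetical cluster size
low = effective_capacity_tb(RAW_TB, compression_ratio=1.3)
high = effective_capacity_tb(RAW_TB, compression_ratio=1.7)
print(f"{RAW_TB:.0f} TB raw -> {low:.0f} to {high:.0f} TB effective")
```

Under these assumptions, 100 TB of raw capacity works out to roughly 104 to 136 TB effective, which is how the effective figure can exceed raw while data is still protected against drive and node failures.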

- More Messages/Day and/or Users per physical CPU core

With all Write I/O serviced by SSD, the CPU WAIT time is significantly reduced, which frees up the physical CPU to perform other activities rather than waiting for a slow SATA drive to respond. As MS Exchange is CPU intensive (especially from 2013 onwards), this means more Messages per Day and/or Users can be supported per MSR VM compared to physical servers.

As Nutanix XCP is a hybrid platform (SSD+SATA), newer/hotter data is serviced by the SSD tier which means faster response times for users AND less CPU WAIT which also helps further increase CPU efficiencies, again leading to more Messages/Day and/or Users per CPU core.

Summary:

With Cost (CAPEX), Complexity (CAPEX & OPEX) and supportability issues well and truly addressed and numerous clear value adds, running a business critical application like MS Exchange on Nutanix + Acropolis Hypervisor (AHV) will make a lot of sense for many customers.