Continuing from Part 1, let’s look at another statement from VCE COO Todd Pavone in the article “COO: VCE converged infrastructure not affected by Dell-EMC”:

We believe that there was a major gap in the core data center for hyper-converged, where customers wanted hyper-converged architecture — they don’t want to invest in tier-one storage or tier-one servers. They want the intelligence in the software, but they also want massive scale. This is for globals, large service providers in a massive scale, like thousands of nodes. We have a large financial service company in New York that is using us for a platform-free application build-up. And they want to pilot it with 10,000 users, but it’s going to go to 10 million users. And so, can we give them an infrastructure for 10,000, but can scale simply and easily to 10 million — or 20 million?

You can’t do that on an appliance, right? But they want hyper-converged. When you get to 10 million users, you want an infrastructure that scales and is nonlinear, leading to a lower cost model. So, we said, “There’s a gap in that market,” and we created the rack.

Let’s again address these points:

- Todd: “They don’t want to invest in tier-one storage or tier-one servers. They want the intelligence in the software, but they also want massive scale.”

If customers don’t want to invest in what I would call “traditional” tier-one storage and servers, then I’d have to agree that they need a very different solution, such as Nutanix, to get to massive scale, especially if they also want easy management and deployment.

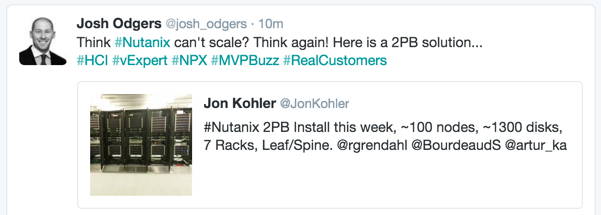

Nutanix has customers with deployments ranging from 3 nodes to thousands of nodes; in fact, many of our large customers run the Acropolis Hypervisor. So any question about Nutanix’s ability to scale is just laughable.

- Todd: “And they want to pilot it with 10,000 users, but it’s going to go to 10 million users. And so, can we give them an infrastructure for 10,000, but can scale simply and easily to 10 million — or 20 million? You can’t do that on an appliance, right?”

Well, you can with Nutanix! In fact, this is a common use case for Nutanix: we frequently design and pilot repeatable models, then scale out as required.

- Todd: “But they want hyper-converged. When you get to 10 million users, you want an infrastructure that scales and is nonlinear, leading to a lower cost model. So, we said, ‘There’s a gap in that market,’ and we created the rack.”

It’s no surprise to me at all that customers want hyper-converged infrastructure and the ability to scale both linearly and non-linearly. Nutanix can do this today and has been able to for a long time. Back in 2013, for example, you could mix compute-heavy/storage-light NX3000 series nodes with compute-light/storage-heavy NX6000 series nodes. This is an example of non-linear scaling, which reduces cost (e.g., cost per GB) over time.

Then in 2014 an even wider range of nodes was released (NX1000, NX3000, NX6000 and NX8000), which enhanced Nutanix’s ability to scale both up and out, linearly and non-linearly.
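To make the cost argument concrete, here is a minimal Python sketch comparing a cluster built from a single node type (linear scaling) with a cluster that mixes compute-heavy and storage-heavy nodes (non-linear scaling). The node profiles, core counts, capacities, prices and the workload requirement are all hypothetical round numbers chosen for illustration; they are not specifications or pricing for any NX model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Hypothetical node profile; the figures are illustrative, not vendor specs."""
    name: str
    cores: int
    usable_tb: float
    price: int  # hypothetical price in arbitrary currency units


def cluster_totals(mix: dict[NodeType, int]) -> tuple[int, float, int]:
    """Return (total cores, total usable TB, total price) for a node mix."""
    cores = sum(node.cores * count for node, count in mix.items())
    tb = sum(node.usable_tb * count for node, count in mix.items())
    price = sum(node.price * count for node, count in mix.items())
    return cores, tb, price


def meets(mix: dict[NodeType, int], need_cores: int, need_tb: float) -> bool:
    """True if the mix satisfies both the compute and the capacity requirement."""
    cores, tb, _ = cluster_totals(mix)
    return cores >= need_cores and tb >= need_tb


def cost_per_gb(mix: dict[NodeType, int]) -> float:
    """Total price divided by usable capacity (1 TB treated as 1000 GB)."""
    _, tb, price = cluster_totals(mix)
    return price / (tb * 1000)


# Hypothetical profiles loosely mirroring "compute heavy" vs "storage heavy" nodes.
compute_heavy = NodeType("compute-heavy", cores=32, usable_tb=4.0, price=30_000)
storage_heavy = NodeType("storage-heavy", cores=16, usable_tb=20.0, price=40_000)

# Hypothetical workload requirement: 64 cores and 60 TB usable.
linear = {compute_heavy: 15}                       # one node type: capacity forces 15 nodes
non_linear = {compute_heavy: 2, storage_heavy: 3}  # mix node types to match the workload

for label, mix in (("linear", linear), ("non-linear", non_linear)):
    print(label, cluster_totals(mix), meets(mix, 64, 60.0), round(cost_per_gb(mix), 2))
```

In this made-up scenario, scaling with compute-heavy nodes alone means buying 15 nodes (and far more cores than the workload needs) just to reach the capacity target, whereas mixing in storage-heavy nodes meets both targets with five nodes and a noticeably lower cost per GB.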

In 2015 Nutanix launched the NX-6035C “Storage Only” node, which allows customers to scale storage independently of compute, enabling non-linear scaling of storage versus compute for customers with high capacity requirements. Importantly, no hypervisor licensing is required to scale storage, because storage-only nodes run the Acropolis Hypervisor (AHV), which is fully interoperable with ESXi and Hyper-V environments.

Remember the rule of thumb: don’t scale capacity without scaling storage controllers!

Nutanix storage-only nodes run a lightweight Controller VM (CVM) to ensure that management, monitoring and data services (e.g., disk balancing, compression, deduplication and erasure coding) do not degrade, even when compute and storage are scaled in a vastly non-linear manner. Storage-only nodes also help improve performance by participating in cluster replication (replication factor RF2/RF3) and disk balancing.
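The rule of thumb can be illustrated with a minimal Python sketch that tracks usable capacity per storage controller. It assumes, as described above, that every node, including a storage-only node, runs one CVM. The starting cluster size and capacities are hypothetical, and the hypothetical `add_capacity_only` helper stands in for any expansion that adds disks without adding controllers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical cluster model: one CVM (storage controller) per node."""
    controllers: int
    usable_tb: float

    def tb_per_controller(self) -> float:
        return self.usable_tb / self.controllers


def add_capacity_only(c: Cluster, tb: float) -> Cluster:
    # Capacity grows but the controller count does not (breaks the rule of thumb).
    return Cluster(c.controllers, c.usable_tb + tb)


def add_storage_only_nodes(c: Cluster, nodes: int, tb_per_node: float) -> Cluster:
    # Each storage-only node brings its own lightweight CVM,
    # so controllers scale together with capacity.
    return Cluster(c.controllers + nodes, c.usable_tb + nodes * tb_per_node)


base = Cluster(controllers=4, usable_tb=40.0)  # hypothetical 4-node starting point
a = add_capacity_only(base, 60.0)
b = add_storage_only_nodes(base, nodes=3, tb_per_node=20.0)

print(a.tb_per_controller())            # 25.0
print(round(b.tb_per_controller(), 2))  # 14.29
```

Adding 60 TB without new controllers leaves each of the four CVMs responsible for 25 TB, while adding the same capacity as three storage-only nodes spreads it across seven CVMs (about 14.3 TB each), so data services such as disk balancing and replication have more controllers sharing the work.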

- Todd: “So, we said, ‘There’s a gap in that market,’ and we created the rack.”

There may have been a gap back in early 2013, but since then Nutanix has continued to innovate and lead the market with solutions that scale both linearly and non-linearly, so I’d say the gap has long been filled. Nutanix also scales management, with a single HTML5 GUI called PRISM and central management of multiple clusters, sites and geographic locations via PRISM Central.

Summary:

I’m sure it’s pretty obvious by now that VCE COO Todd Pavone and I have different opinions on what HCI is capable of. During my time at Nutanix I have seen countless successful small, medium and large-scale mission-critical application deployments, and the percentage of Nutanix business from these workloads continues to increase thanks to our investment in a dedicated virtualized Business Critical Applications (vBCA) team, which I am fortunate to be part of.

Next time you’re considering new infrastructure for a mission-critical application, reach out and I’ll happily work with you to see if Nutanix is a good fit for your use case.

Let me finish by saying this: I can guarantee that, in the unlikely event the workloads are not suitable for Nutanix, I will be the first to tell you and will help you find an alternative solution.