Nutanix has a number of critically important & unique self healing capabilities which differentiate the platform from not only traditional SAN/NAS arrays but other HCI products.

Nutanix can self heal fully automatically, not only from the loss of SSD/HDD/NVMe devices and node failures, but also by recovering the management stack (PRISM), all without user intervention.

First, let’s go through self healing of the data after device or node failures.

Let’s take a simple comparison between a traditional dual controller SAN and the average* size Nutanix cluster of eight nodes.

*Average is calculated as the total number of nodes sold divided by the number of customers globally.

In the event of a single storage controller failure, the SAN/NAS is left with no resiliency and is at the mercy of the service level agreement (SLA) with the vendor to replace the component before resiliency (and in many cases performance) can be restored.

Compare that to Nutanix: only one of the eight storage controllers (or 12.5%) is offline, leaving seven to continue serving the workloads and automatically restore resiliency, typically in just minutes, as Part 1 demonstrated.
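The arithmetic behind this comparison is simple; the minimal Python sketch below just makes it explicit. The function name `controller_loss` is hypothetical, and it only counts controllers, not capacity or performance.

```python
def controller_loss(total_controllers: int, failed: int = 1) -> tuple[int, float]:
    """Return (controllers still running, percentage of controllers offline)."""
    remaining = total_controllers - failed
    offline_pct = 100.0 * failed / total_controllers
    return remaining, offline_pct


# Dual-controller SAN: one failure leaves a single controller and no redundancy.
print(controller_loss(2))  # (1, 50.0)
# Average (eight node) Nutanix cluster: seven CVMs keep serving I/O and rebuilding.
print(controller_loss(8))  # (7, 12.5)
```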

I’ve previously written a blog post titled “Hardware support contracts & why 24×7 4 hour onsite should no longer be required”, which covers this concept in more detail. Long story short: if restoring the resiliency of a platform depends on the delivery of new parts or, worse, human intervention, the risk of downtime or data loss is much higher than for a platform which can self heal back to a fully resilient state without HW replacement or human intervention.

Some people (or competitors) might argue, “What about a smaller (Nutanix) cluster?”.

I’m glad you asked: even a four node cluster can suffer a node failure and FULLY SELF HEAL into a resilient three node cluster without HW replacement or human intervention.

The only scenario where a Nutanix environment cannot fully self heal to a state where another node failure can be tolerated without downtime is a three node cluster. However, even a three node cluster can tolerate one node failure: data will be re-protected and the cluster will continue to function with just two nodes. A subsequent node failure would result in downtime but, critically, no data loss.

Importantly, drive failures can still be tolerated in the degraded state where only two nodes are running.

Note: In the event of a node failure in a three node vSAN cluster, data is not re-protected and remains at risk until the node is replaced AND the rebuild is complete.

The only prerequisite for Nutanix to be able to perform the complete self heal of data (and even the management stack, PRISM) is that sufficient capacity exists within the cluster. How much capacity, you ask? I recommend N+1 for RF2 configurations, or N+2 for RF3 configurations (assuming two concurrent failures, or one failure followed by a subsequent failure).

So the worst-case scenario for the minimum size clusters would be 33% for a three node RF2 cluster and 40% for a five node RF3 cluster. However, before the competitors break out the Fear, Uncertainty and Doubt (FUD), let’s look at how much capacity is required for self healing as the cluster size increases.

The following table shows the percentage of capacity required to fully self heal based on N+1 and N+2 for cluster sizes up to 32 nodes.

Note: These values assume the worst-case scenario that all nodes are at 100% capacity, so in the real world the overhead will be lower than the table indicates.

As we can see, for an average size (eight node) cluster, the free space required is just 13% (rounded up from 12.5%).

If we take N+2 for an eight node cluster, the MAXIMUM free space required to tolerate two node failures and a full rebuild to a resilient state is still just 25%.
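Since the table itself is not reproduced here, the following Python sketch regenerates its worst-case values under the same assumption as the note above. It additionally assumes equally sized nodes, and that each tolerated node failure (one for N+1/RF2, two for N+2/RF3) removes 1/N of the cluster’s data, which must then fit in the survivors’ free space. Values are rounded half up to match the 13% and 33% figures quoted in the text; the function names are illustrative only.

```python
import math


def self_heal_reserve(nodes: int, node_failures: int) -> float:
    """Worst-case free space (% of cluster) needed to re-protect data after node failures.

    Assumes equally sized nodes, each holding an equal share of the data:
    the failed nodes held node_failures / nodes of it, which must fit in free space.
    """
    if not 0 < node_failures < nodes:
        raise ValueError("node_failures must be between 1 and nodes - 1")
    return 100.0 * node_failures / nodes


def round_half_up(pct: float) -> int:
    """Round 12.5 -> 13 and 33.3 -> 33, as in the article's figures."""
    return math.floor(pct + 0.5)


for n in range(3, 33):
    n1 = round_half_up(self_heal_reserve(n, 1))
    # RF3 (N+2) needs at least five nodes.
    n2 = f"{round_half_up(self_heal_reserve(n, 2))}%" if n >= 5 else "n/a"
    print(f"{n:>2} nodes  N+1: {n1:>2}%  N+2: {n2}")
```

The reserve shrinks as 1/N, so at 32 nodes the N+1 figure is roughly 3%.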

It is important to note that, thanks to the Acropolis Distributed Storage Fabric (ADSF), the free space does not need to account for large objects (e.g.: 256GB), as Nutanix uses 1MB extents which are evenly distributed throughout the cluster, so there is no wasted space due to fragmentation, unlike less advanced platforms.
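To illustrate why a large object does not need a matching block of free space, the sketch below splits a 256GB object into 1MB extents and spreads them over an eight node cluster. The round-robin placement is a deliberate simplification for illustration, not how ADSF actually chooses extent locations, and `place_extents` is a hypothetical name.

```python
from collections import Counter

EXTENT_MB = 1  # extent size quoted in the text


def place_extents(object_gb: int, nodes: int) -> Counter:
    """Count how many 1MB extents of an object land on each node (round-robin placement)."""
    extents = object_gb * 1024 // EXTENT_MB
    return Counter(i % nodes for i in range(extents))


per_node = place_extents(256, 8)
print(per_node[0], "MB on each node")  # 32768 MB (32GB), not a 256GB contiguous block
```

In this model, if a node fails only its 32GB share needs a new home, and that data can be scattered across the seven survivors in extent-sized pieces.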

Note: The size of nodes in the cluster does not impact the capacity required for a rebuild.

Two advantages ADSF has over other platforms are that Nutanix has neither the concept of a “cache drive” nor the construct of “disk groups”.

Using disk groups poses a high risk to resiliency, as a single “cache” drive failure can take an entire disk group (made up of several drives) offline, forcing a much more intensive rebuild operation than is required. A single drive failure in ADSF is just that, a single drive failure, and only the data on that drive needs to be rebuilt, which is of course done in an efficient distributed manner (i.e.: a “Many to Many” operation as opposed to a “One to One” operation like other products).
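The “Many to Many” behaviour can be seen in a small simulation. The sketch below assumes a hypothetical eight node cluster with four drives per node, places two copies (RF2) of each extent on two different nodes at random, fails a single drive and then counts which nodes read the surviving copies and which nodes receive the new ones. Random placement is a simplification for illustration, not ADSF’s actual placement logic.

```python
import random
from collections import Counter

NODES, DRIVES_PER_NODE, EXTENTS = 8, 4, 10_000  # hypothetical cluster layout
rng = random.Random(42)  # fixed seed so the run is repeatable

# RF2: each extent has two copies on two different nodes, each on a random drive.
replicas = []
for _ in range(EXTENTS):
    node_a, node_b = rng.sample(range(NODES), 2)
    replicas.append(((node_a, rng.randrange(DRIVES_PER_NODE)),
                     (node_b, rng.randrange(DRIVES_PER_NODE))))

failed_drive = (0, 0)  # node 0, drive 0
sources, targets = Counter(), Counter()
for copy_a, copy_b in replicas:
    if failed_drive in (copy_a, copy_b):
        survivor = copy_b if copy_a == failed_drive else copy_a
        sources[survivor[0]] += 1  # node that reads the surviving copy
        # The new copy may go to any node other than the one holding the survivor.
        target = rng.choice([n for n in range(NODES) if n != survivor[0]])
        targets[target] += 1

print(f"{sum(sources.values())} extents rebuilt, "
      f"read from {len(sources)} nodes, written to {len(targets)} nodes")
```

Because the surviving copies are scattered, many nodes share both the reads and the writes, rather than one drive copying to one replacement.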

The only time when a single drive failure causes an issue on Nutanix is with single SSD systems in which it’s the equivalent of a node failure, but to be clear this is not a limitation of ADSF, just that of the hardware specification chosen.

For production environments, I don’t recommend the use of single SSD systems, as the resiliency advantages of a dual SSD system outweigh its minimal additional cost.

Interesting point: vSAN is arguably always a single SSD system since a “Disk group” has just one “cache drive” making it a single point of failure.
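The difference in blast radius can be expressed in a few lines. The sketch below uses a hypothetical disk group of one cache device and seven capacity drives; the class and function names are illustrative and do not model any vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class DiskGroup:
    capacity_drives: int  # drives that sit behind a single cache device


def drives_to_rebuild(group: DiskGroup, failed_is_cache: bool) -> int:
    """Drives whose data must be rebuilt after one device in a disk group fails."""
    # Losing the cache device takes the whole disk group offline.
    return group.capacity_drives if failed_is_cache else 1


def drives_to_rebuild_without_groups() -> int:
    """With no disk groups or cache drives, one failed drive is just one drive."""
    return 1


group = DiskGroup(capacity_drives=7)
print(drives_to_rebuild(group, failed_is_cache=True))  # 7
print(drives_to_rebuild_without_groups())              # 1
```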

I’m frequently asked what happens after a cluster self heals and another failure occurs. Back in 2013, when I started with Nutanix, I presented a session at vForum Sydney where I covered this topic in depth. The session was standing room only, and as a result of its popularity I wrote a blog post showing how a five node cluster can self heal from a failure into a fully resilient four node cluster, then tolerate another failure and self heal into a three node cluster.
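The capacity check behind that five, four, three node sequence can be sketched as follows. It assumes equally sized nodes, failures that occur one at a time after the previous rebuild has finished, and a `used_tb` figure that already includes all RF copies; the numbers and the function name are hypothetical.

```python
def self_heal_sequence(nodes: int, node_tb: float, used_tb: float, min_nodes: int = 3) -> list[int]:
    """Cluster sizes that end up fully re-protected as nodes fail one at a time.

    Stops when the next smaller cluster could not hold all the data (used_tb,
    including every RF copy) or when min_nodes is reached.
    """
    sizes = [nodes]
    while nodes > min_nodes and used_tb <= (nodes - 1) * node_tb:
        nodes -= 1
        sizes.append(nodes)
    return sizes


print(self_heal_sequence(5, node_tb=10, used_tb=25))  # [5, 4, 3]
print(self_heal_sequence(5, node_tb=10, used_tb=35))  # [5, 4]
```

The second call shows why free capacity is the only prerequisite: with too much data stored, the cluster still self heals once but not twice.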

This capability is nothing new and is far and away the most resilient architecture in the market even compared to newer platforms.

When you need to allow for failures of constructs such as “Disk Groups”, the amount of free space you need to reserve for failures is much higher, as we can learn from a recent VMware vSAN article titled “vSAN degraded device handling”.

Two key quotes to consider from the article are:

we strongly recommend keeping 25-30% free “slack space” capacity in the cluster.

If the drive is a cache device, this forces the entire disk group offline

When you consider the flaws in the underlying vSAN architecture, it becomes clear why VMware recommends 25-30% free space in addition to FTT2 (three copies of data).

Next let’s go through the self healing of the Management stack from node failures.

All components which are required to Configure, Manage, Monitor, Scale and Automate are fully distributed across all nodes within the cluster. There is no requirement for customers to deploy management components for core functionality (unlike vSAN/ESXi, which requires the vCenter Server Appliance, VCSA).

There is also no need for users to make the management stack highly available, again unlike vSAN/ESXi.

As a result, there is no single point of failure with the Nutanix/Acropolis management layer.

Let’s take a look at a typical four node cluster:

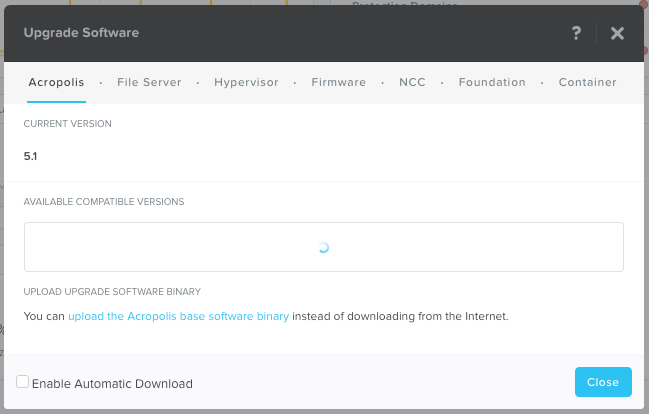

In the diagram above we see four Controller VMs (CVMs), each servicing one node. In the cluster we have an Acropolis Master along with multiple Acropolis Slave instances.

In the event the Acropolis Master becomes unavailable for any reason, an election will take place and one of the Acropolis Slaves will be promoted to Master.

This can be achieved because Acropolis data is stored in a fully distributed Cassandra database which is protected by the Acropolis Distributed Storage Fabric.
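The failover described above follows a familiar leader-election pattern. The sketch below is a deliberately simplified, hypothetical model: it promotes the healthy instance with the lowest ID, which is an assumption for illustration and not how Acropolis actually chooses its new Master.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class AcropolisCluster:
    healthy: Dict[int, bool]      # CVM id -> is its Acropolis instance up?
    master: Optional[int] = None

    def elect(self) -> int:
        """Promote a surviving instance to Master (hypothetical rule: lowest healthy id)."""
        candidates = sorted(cvm for cvm, up in self.healthy.items() if up)
        if not candidates:
            raise RuntimeError("no healthy Acropolis instances left")
        self.master = candidates[0]
        return self.master

    def fail(self, cvm: int) -> None:
        """Mark a CVM as down; if it was the Master, a Slave is promoted."""
        self.healthy[cvm] = False
        if cvm == self.master:
            self.elect()


cluster = AcropolisCluster({1: True, 2: True, 3: True, 4: True})
cluster.elect()
cluster.fail(1)        # the Master goes away
print(cluster.master)  # 2
```

Losing a Slave leaves the Master in place; only losing the Master triggers a new election.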

When an additional Nutanix node is added to the cluster, an Acropolis Slave is also added which allows the workload of managing the cluster to be distributed, therefore ensuring management never becomes a point of contention.

Things like performance monitoring, stats collection, Virtual Machine console proxy connections are just a few of the management tasks which are serviced by Master and Slave instances.
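To see why adding instances spreads the load, the sketch below hands management tasks (for example VM console proxy sessions) to whichever Acropolis instance currently has the fewest. The least-loaded policy and all names are assumptions for illustration only, not the actual Acropolis scheduling logic.

```python
def assign(tasks: list[str], instances: list[str]) -> dict[str, list[str]]:
    """Give each task to the instance with the fewest tasks so far (hypothetical policy)."""
    load = {name: [] for name in instances}
    for task in tasks:
        least_busy = min(load, key=lambda name: len(load[name]))
        load[least_busy].append(task)
    return load


sessions = [f"console-{i}" for i in range(20)]
four_nodes = assign(sessions, ["cvm1", "cvm2", "cvm3", "cvm4"])
five_nodes = assign(sessions, ["cvm1", "cvm2", "cvm3", "cvm4", "cvm5"])
print(max(len(t) for t in four_nodes.values()))  # 5
print(max(len(t) for t in five_nodes.values()))  # 4
```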

Another advantage of Nutanix is that the management layer never needs to be sized or scaled manually. There are no vApps, database servers or Windows instances to deploy, install, configure, manage or license, which reduces cost and simplifies management of the environment.

Key point:

- The Nutanix Acropolis Management stack is automatically scaled as nodes are added to the cluster, therefore increasing consistency, resiliency and performance, and eliminating the potential for architectural (sizing) errors which may impact manageability.

The reason I’m highlighting a competitor’s product is that it’s important for customers to understand the underlying differences, especially when it comes to critical factors such as resiliency for both the data and management layers.

Summary:

Nutanix ADSF provides excellent self healing capabilities without the requirement for hardware replacement for both the data and management planes and only requires the bare minimum capacity overheads to do so.

If a vendor led with any of the below statements (all true of vSAN), I bet the conversation would come to an abrupt halt.

- A single SSD is a single point of failure and causes multiple drives to concurrently go offline and we need to rebuild all that data

- We strongly recommend keeping 25-30% free “slack space” capacity in the cluster

- Rebuilds are a slow, One to One operation and in some cases do not start for 60 mins.

- In the event of a node failure in a three node vSAN cluster, data is not re-protected and remains at risk until the node is replaced AND the rebuild is complete.

When choosing an HCI product, consider its self healing capabilities for both the data and management layers, as both are critical to the resiliency of your infrastructure. Don’t put yourself at risk of downtime by being dependent on hardware replacements being delivered in a timely manner. We’ve all experienced, or at least heard, horror stories where vendor HW replacement SLAs have not been met due to parts not being available, so be smart and choose a platform which minimises risk by fully self healing.

Index:

Part 1 – Node failure rebuild performance

Part 2 – Converting from RF2 to RF3

Part 3 – Node failure rebuild performance with RF3

Part 4 – Converting RF3 to Erasure Coding (EC-X)

Part 5 – Read I/O during CVM maintenance or failures

Part 6 – Write I/O during CVM maintenance or failures

Part 7 – Read & Write I/O during Hypervisor upgrades

Part 8 – Node failure rebuild performance with RF3 & Erasure Coding (EC-X)

Part 9 – Self healing

Part 10 – Disk Scrubbing / Checksums